Potential Impacts of Large Language Models on Engineering Management

Everyone is talking about AI and Large Language Models (e.g., GPT), and how they are reshaping the roles of software engineers, designers, customer support, and more. The narrative seems to overlook the impact of AI on management roles.

I took some time to break down potential areas where AI could disrupt management, and to highlight the caveats.

Know your people

In a previous article, “No Shortcuts: Know Your People,” I discussed the fundamental aspect of management: a deep understanding of the people you work with. As AI evolves, team members will get more done, which also means much more information for their manager to process and analyze to ensure good growth and performance.

LLMs can help managers analyze their team members’ activity, spot repeating patterns, and even monitor progression. This may sound like a stretch, but a mini version of it already exists. Products like Multitudes let you connect multiple sources such as GitHub, Jira, Slack, and more, and then surface insights and static patterns per person (PRs, cycle time).

With LLMs, we can extend these capabilities and move beyond static metrics: instead of the metadata, we can go into the textual content itself and look at its meaning.
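To make the contrast concrete, here is a minimal Python sketch. It assumes a hypothetical `PullRequest` record pulled from a source like GitHub. `static_metrics` computes the kind of per-person metadata mentioned above (PR count, median cycle time). `content_for_llm` instead collects the PR descriptions themselves, which is the textual content an LLM would analyze. The record shape and both function names are illustrative only. They are not the API of Multitudes or any other product.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class PullRequest:
    # Hypothetical record shape; real tools expose much richer data.
    author: str
    opened_at: datetime
    merged_at: Optional[datetime]  # None while the PR is still open
    description: str


def static_metrics(prs):
    """Metadata only: PR count and median cycle time (hours) per author."""
    counts = defaultdict(int)
    cycle_hours = defaultdict(list)
    for pr in prs:
        counts[pr.author] += 1
        if pr.merged_at is not None:
            delta = pr.merged_at - pr.opened_at
            cycle_hours[pr.author].append(delta.total_seconds() / 3600)
    return {
        author: {
            "prs": counts[author],
            # Only merged PRs have a cycle time; None if the author has none.
            "median_cycle_time_h": median(cycle_hours[author]) if cycle_hours[author] else None,
        }
        for author in counts
    }


def content_for_llm(prs, author):
    """Content, not metadata: the PR descriptions an LLM would actually read."""
    return "\n\n".join(pr.description for pr in prs if pr.author == author)
```

The numbers from `static_metrics` say how fast work moves. The text from `content_for_llm` is the part that could say something about how it was done.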

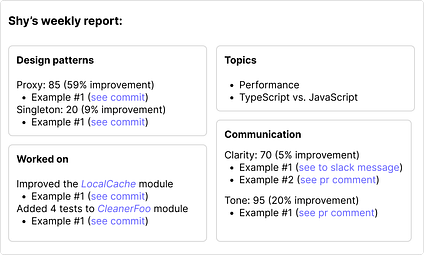

Imagine receiving a comprehensive report on your team member’s coding style, the number and quality of design patterns they used last week, or an evaluation of the quality of inter-team communication in terms of tone and directness. With such a report, managers can get a better picture of potential areas of improvement and follow up quickly.

Caveats

- People are complex creatures. While you might get a fantastic view of their visible work, there is always a lot going on below the surface. Managers might start to neglect deep understanding and base their feedback entirely on LLM reports.

- Delivering the feedback is still the most delicate part. You can have all the right information and still fail miserably to create real behavior change. Even if this information is available to employees themselves (and it should be), most of us cannot easily make the leap from knowing what the issues are to actually improving.

The right person, on the right task, at the right time

A big part of the engineering manager’s job is the art of assigning work. Done right, it can be a great tool for driving and reinforcing growth. There’s a limit to how much conversation alone can change behavior. Some things need to be learned by doing, whether that’s a new technical skill, leading a project, or improving communication.

Now, imagine you could fine-tune an LLM to assign work based on weekly reports and a description of each person’s growth plan.

Inputs fed into the model:

- Shy’s and Maya’s weekly reports
- Focus on improving Shy’s distributed systems skills, project leadership, and direct communication.
- Focus on improving Maya’s teamwork and DB indexing; give her a complex project that will let her unleash her technical skills.
- GitHub, Jira, or Linear issues, bugs, and tasks

Outputs:

- Shy should work on project A.
- Assign Shy to work together with Maya.
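The inputs above could be assembled into a single prompt along these lines. This is a minimal sketch. `build_assignment_prompt` and the prompt wording are hypothetical. The sketch deliberately stops before calling any model, because the choice of LLM (fine-tuned or not) and its API are left open.

```python
def build_assignment_prompt(weekly_reports: dict[str, str],
                            growth_plans: dict[str, str],
                            tasks: list[str]) -> str:
    """Assemble reports, growth plans, and open work into one prompt string.

    Hypothetical format; the result would be sent to whichever LLM the team uses.
    """
    lines = ["You assign engineering work to support each person's growth plan.", ""]
    for person in sorted(growth_plans):  # stable, alphabetical order
        lines.append(f"## {person}")
        lines.append(f"Weekly report: {weekly_reports.get(person, '(none)')}")
        lines.append(f"Growth plan: {growth_plans[person]}")
        lines.append("")
    lines.append("## Open work items")
    lines.extend(f"- {task}" for task in tasks)
    lines.append("")
    lines.append("Suggest an owner (and an optional pairing partner) for each item, "
                 "with a one-line reason tied to the growth plan.")
    return "\n".join(lines)


if __name__ == "__main__":
    plans = {
        "Shy": "Distributed systems, project leadership, direct communication.",
        "Maya": "Teamwork and DB indexing; a complex, technically demanding project.",
    }
    reports = {"Shy": "Finished the queue migration."}
    print(build_assignment_prompt(reports, plans, ["Project A", "Slow orders query"]))
```

Keeping prompt assembly separate from the model call lets a manager read exactly what the model was told before trusting its suggestions.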

That’s pretty awesome, isn’t it? Managers can ensure every team member gets the growth path tailor-made for their needs and reinforce it with real production work.

Caveats

- What about personal details? Life can’t be summarized in a few lines of a prompt. Our professional life is just a small portion of who we are, and an LLM might make wrong assumptions about who can work with whom, or even favor some people over others.

- Managers will need to verify the plans and assignments. People are not machines and might resist even projects that, on paper, are perfect for their growth.

Performance reviews

I almost forgot performance reviews. These might become completely automated.

In the good scenario, it might be a summary of all your weekly (or bi-weekly) 1:1s, where you share feedback and follow up on personal progress. In the bad scenario, it will be an automated summary of raw activity events such as PRs, commits, messages, and Zoom transcripts. It will become easier to spot trends and behavior changes, such as improvements in code and communication quality.
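As a rough illustration of the "bad scenario," the first step of such automation might simply bucket raw events per person and per ISO week so that trends become visible. The `ActivityEvent` shape and the helper names below are hypothetical. Counting events says nothing about their quality, which is exactly where the caveats come in.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ActivityEvent:
    # Hypothetical event shape, e.g. exported from GitHub, Slack, or Zoom.
    person: str
    kind: str  # e.g. "pr", "commit", "message", "transcript"
    day: date


def weekly_counts(events, person):
    """Count one person's events per (ISO year, ISO week, kind)."""
    counts = Counter()
    for event in events:
        if event.person == person:
            iso_year, iso_week, _ = event.day.isocalendar()
            counts[(iso_year, iso_week, event.kind)] += 1
    return counts


def trend(counts, kind):
    """Return [((iso_year, iso_week), count), ...] for one kind, oldest week first."""
    weeks = sorted({(year, week) for (year, week, k) in counts if k == kind})
    return [(week, counts[(week[0], week[1], kind)]) for week in weeks]


events = [
    ActivityEvent("Shy", "pr", date(2024, 1, 2)),
    ActivityEvent("Shy", "pr", date(2024, 1, 9)),
    ActivityEvent("Shy", "pr", date(2024, 1, 10)),
    ActivityEvent("Maya", "pr", date(2024, 1, 2)),
]
print(trend(weekly_counts(events, "Shy"), "pr"))  # [((2024, 1), 1), ((2024, 2), 2)]
```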

Performance reviews might become just a ceremony instead of a true analysis (which many managers don’t do anyway).

Caveats

- The quality of the review can only be as good as the events coming in. If the manager doesn’t practice continuous feedback and record every interaction they have, the end results might be inaccurate.

- As with everything, once things become automated, people will start finding ways to trick the system. Managers who blindly trust the generated performance conclusions may create the wrong incentives. This is similar to how people game systems in other areas, such as Google’s ranking algorithms.

Looking forward

A more individualistic way of working will emerge, since people will be able to get good support from tools like Copilot or GPT. This means the manager’s role may shift toward filling the resulting gap in community and human touch.

Keeping your employees engaged and fulfilled is already an important part of a manager’s responsibilities, but a more individualistic way of working will make it even more significant. Managers must work hard to maintain healthy relationships and strong connections between team members.

Data quality and supervision of the inputs will become critical. AI models are known to produce biased results, and the company that created a model may change it in the future. Managers will need to ensure that the data used to train the models is representative of the real world.

A Reminder

Knowing your people means that you know your people, not that the LLM does.