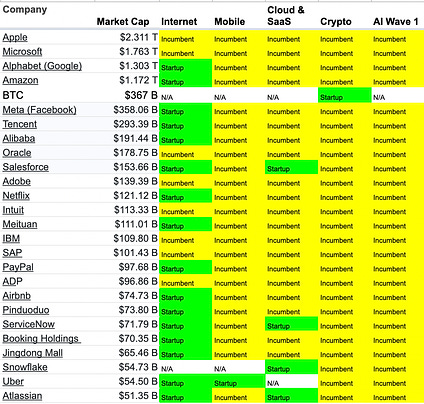

AI: Startup vs Incumbent Value

In each technology wave, the value, revenue, market cap, profits, and great people captured by startups versus incumbents differ. In some waves it all goes to startups, while in others it goes to incumbents or is split between them. Unexpectedly, roughly all of the value from the prior wave of AI went to incumbents rather than startups, despite a lot of startup activity. This post explores that dynamic and posits that the current unsupervised learning wave of AI will produce strong startup successes in addition to incumbent value.

Some history

In the first internet wave, most of the value went to startups (Google, Amazon, PayPal, eBay, Salesforce, Facebook, Netflix), while some was captured by incumbents (Microsoft, Apple, IBM, Oracle, Adobe) who extended their franchises onto the internet. Perhaps this was a 60:40 or 70:30 startup:incumbent split.

For mobile, most of the value went to incumbents (Apple, Google, and then every mobile version of an incumbent’s app; e.g. “Mobile CRM” was not a standalone startup but rather Salesforce on your iPhone), while there was still significant capture by startups (WhatsApp, Uber, DoorDash, Instagram, Instacart, etc.). Perhaps this was a 20:80 startup:incumbent split.

Crypto, in contrast, has been roughly 100% startup capture (Bitcoin, Ethereum, Coinbase, Binance, FTX, etc.), with very little participation in value creation by existing financial services or infrastructure companies. Perhaps the biggest incumbent participants in crypto have been semiconductor companies like AMD or NVIDIA, whose chips are sometimes used for token mining.

(Please note the term “startup” is meant to mean a new company started due to, or accelerated by, a specific wave. So while Apple was a disruptor in mobile versus incumbents like Nokia, it was not a brand new company started to make mobile devices. This is of course an imperfect definition.)

Why was so little startup value created by the prior AI wave?

Machine learning has been an odd case in which the first wave of value (machine vision, RNNs, CNNs, early GANs, deep learning) went almost entirely to incumbents.

While there were many “AI first” companies over the last decade (prior to the current transformer and unsupervised learning revolution), the really big AI applications landed with Google, Facebook (newsfeed and ads), TikTok (ByteDance), Netflix (recommendations), Amazon (Alexa), etc.

Perhaps the biggest outcomes in the first wave of AI to date are the self-driving car companies, many of which are subsidiaries or divisions of incumbents (e.g. at Google, GM, and Tesla) or were taken public via SPAC during the financial mania of the COVID era.

Outside of a few notable one-offs, the prior wave of AI-first companies as a whole has not done very well.

It is interesting to ask why this first wave of AI startups captured so little of the market. Some hypotheses:

1. Technology created 0.5-3X better, rather than 10X better, products (?)

One hypothesis is that the prior wave of AI helped create better products, but not so remarkably better that they could beat incumbents or hard market structures. To beat an incumbent as a startup, you usually need to either build something so dramatically better that it overcomes the incumbent’s distribution, capital, and pre-existing product moats, or focus on a brand new customer segment or distribution moat that the incumbent cannot serve for some reason. In general, you need a 10X better product. Maybe the last wave of AI was, in some cases, good but not great in terms of product improvement, and this created insufficient differentiation?

2. Data differentiation used to be more important (?)

Many of the largest-scale uses of AI to date have been at consumer-centric companies with large data sets to train on (Google, Facebook, Uber, etc.). Perhaps incumbents won due to a data advantage that is now going away, as companies use the broader internet as an initial training set and switch to models that work more robustly with smaller data sets? Maybe in the prior era of AI, data sets mattered more and it was harder to train a general-purpose model à la GPT-3+ off the open web?

3. Hard markets (?)

Many (but not all) of the areas that companies chose to compete in either had pre-existing incumbents that could “just add AI” or were structurally hard markets. An incumbent’s offering can be only 50% as good, but as long as it is bundled with a core pre-existing product that has lots of customers, the incumbent can still win (see e.g. Teams versus Slack). Many prior-wave AI companies either took on incumbents directly or worked in hard markets. Hard markets include areas like education or healthcare, where technological innovation is often crushed by market structure, regulation, or a seeming indifference to actual end-user needs by people already in the field.

The MYCIN project at Stanford in the 1970s was telling: programmers developed an expert system that could outperform the infectious disease medical staff at Stanford in predicting what someone was infected with, but it never got adopted despite its superior performance. Some markets are hard, and even if adding machine learning makes something 10X better, it may not get adopted for other reasons.

4. Other (?)

There may be other reasons. Let me know what you think on HN or Twitter 🙂

Will this AI wave be different?

I have worked on AI-driven products for a long time. I worked on ads targeting at Google 15 years ago (in addition to kick-starting many of the mobile efforts there) and then for a period worked on search product at Twitter (before taking on more operationally intensive business areas). I co-founded Color, which started off focusing on big data, ML, and genomics (and has since morphed into a virtualized healthcare delivery company), and I have also invested in AI-related companies for 10+ years.

While many of the prior innovations in AI were striking and exciting (AlexNet, CNNs, RNNs, GANs, etc.), this time does feel different for a few reasons. There is reason to believe that while incumbents should capture a good amount of the value in this wave, startups will take a bigger share of AI-generated value this time around.

Differences include:

1. Better tech is coming across many areas.

One of the remarkable things about this current technology wave is the speed of innovation across many areas. Future GPT-like language models (GPT-4? GPT-N?) should increase the power, fidelity, and reach of natural language across consumer and B2B in deep ways, and potentially change everything from human interactions (dialogue-based interactions?) to white collar work (a co-pilot, by vertical, for anything that touches text). In parallel, advances are happening in image generation, speech to text, text to speech, music, video, and other areas. One can imagine 4-5 clear business use cases for image generation alone, from better versions of various design tools to storyboarding for movie making. Which of these use cases are won by startups versus incumbents remains to be seen, but for a subset one can guess based on the strength or nimbleness of existing incumbents.

This time, the technology seems dramatically stronger, which means it is easier to create 10X better products to overcome incumbent advantages. The “why now” may simply be a technology sea change.

The pivot point for whether this is the moment AI takes off for startups depends on whether GPT-4 (or some other API platform) is dramatically more performant than GPT-3/3.5. GPT-3 seems useful, but not so “breakthrough” useful that large numbers of startups are building big businesses on it yet. This could also simply mean that not enough time has passed since its recent launch. However, a model 5-10X better than GPT-3 should create a whole new startup ecosystem while also augmenting incumbent products. A version 1.5-2X better than GPT-3 may not be a big enough sea change to cause a true “why now” shift, although any incremental improvement is always positive.

2. New tech means there are startups providing valuable infrastructure to the rest of the industry.

Unlike the prior wave of AI startups, there is a clear set of infrastructure-centric companies with broad adoption and rapidly growing usage, including OpenAI, Stability.AI, Hugging Face, Weights and Biases, and others. While revenue lags usage for a subset of companies in this segment, it is ramping quickly in a manner not atypical for open source or API-centric business models.

OpenAI is now the clear leader in LLM APIs, a position that Google, 4 years ago, was arguably the default favorite to win. Google’s failure to capitalize on its many advantages specifically in AI has been striking. It feels like a Xerox PARC moment: Google invented transformers and had all the talent, data, and distribution to build the seminal infrastructure for the industry, and then a startup showed up, Apple-like, to drive the industry forward[0].

Similarly, Hugging Face, Weights and Biases, and others are providing tools for the AI industry in ways that incumbent dev tools companies have so far failed to do.

3. There are clear app use cases without strong incumbents.

A number of the earliest use cases and startups, for example marketing copy (Copy.AI or Jasper), image gen (Midjourney, Stable Diffusion, etc.), and code gen (GitHub Copilot, Replit), are seeing nice adoption and growth in a way that did not exist in the prior AI wave.

In general, this wave of AI applications seems to do best in markets where:

- There are highly repetitive, highly paid tasks (code, marketing copy, images for websites, etc.).
- Imperfect fidelity is fine, as you have a human in the loop who wants to review the items (which creates a nice feedback loop or future training set). A human in the loop is not necessary, but it seems to be a common feature to date.
- Workflow tools do not exist or are weak for the use case, so the AI features become a core and useful part of a broader workflow tool.
- Summarization or generation of text or images is useful for the product application. New AI tech enables this with high fidelity in a way that was not possible before.

So far, companies with these characteristics seem to be the sweet spot for this wave of ML. Other areas such as voice transcription, robotics, and video are on their way as well, which will broaden next-gen AI use cases.

Focus on end users and markets

The key with all this exciting tech will be to avoid the hammer-looking-for-a-nail problem. It will be important to identify actual end user needs and unserved product/markets that will benefit from this wave of exciting technology.

As the builders in the market shift from research scientists to product-centric builders (including, of course, some product-minded research scientists), we should see a blossoming of new machine learning driven applications. This will likely be a 10-20 year transformation, similar to cloud, which is itself still ongoing.

Scale matters

When thinking about startup versus incumbent value, it is important to remember the scale of incumbents. For example, a 10% increase in Google’s market cap is currently $130 billion, the equivalent of almost 7 Figmas, 4 Snowflakes, 17 GitHubs, or 130 Stability.AIs! The market caps of incumbents have grown so large that even small changes can add up to entire ecosystems or market segments.
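As a quick sanity check on the scale comparison, the snippet below back-calculates the per-company valuations implied by the ratios in the paragraph above. Its only inputs are the post's own figures: $130 billion as a 10% increase, and the 7/4/17/130 counts, with "almost 7" treated as 7. The implied valuations are derived from those ratios and are not independent market data. The helper name `implied_valuation` is hypothetical.

```python
# Back-of-envelope check of the "scale matters" comparison.
# Inputs come from the post; every implied value is derived, not sourced market data.

INCREASE_B = 130.0  # a 10% increase in Google's market cap, in $ billions (from the post)

# If a 10% increase equals $130B, the total market cap is 10x that amount.
IMPLIED_GOOGLE_CAP_B = INCREASE_B * 10

# How many of each company the $130B is said to equal ("almost 7" Figmas treated as 7).
EQUIVALENTS = {"Figma": 7, "Snowflake": 4, "GitHub": 17, "Stability.AI": 130}


def implied_valuation(total_b: float, count: int) -> float:
    """Hypothetical helper: per-company value in $B if `total_b` equals `count` companies."""
    return total_b / count


if __name__ == "__main__":
    print(f"Implied Google market cap: ~${IMPLIED_GOOGLE_CAP_B:,.0f}B")
    for name, count in EQUIVALENTS.items():
        print(f"{name}: ~${implied_valuation(INCREASE_B, count):.1f}B each")
```

Run as-is, it reports an implied total market cap of about $1,300B. The implied per-company values are roughly $18.6B per Figma, $32.5B per Snowflake, $7.6B per GitHub, and $1.0B per Stability.AI. Because $130 billion is "almost" 7 Figmas, each Figma's implied value would be slightly higher than the figure shown.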

Given the likely coming impact of AI, one could imagine one or more truly massive startups being created. Even if incumbents capture most of the value this time due to raw scale, startups should participate in a significant way in new market cap and impact on the world. Certain market segments (e.g. search) might become vulnerable for the first time in a long time. After personally working on, or investing in, AI-related products for 15 years, it feels like startups will finally start to get real value from AI. Exciting times lie ahead![1]

NOTES

[0] Xerox PARC famously invented the GUI, the mouse, etc. and then demo’d it to Steve Jobs, who launched it all with the Apple Mac. Google invented transformers and published its work to the world. OpenAI has capitalized on this technology the best so far.

[1] I could of course be wrong on all this. If so, expect Stability.AI and Hugging Face to be taken public via SPAC in the bubble of 2030 as the Fed drops rates and the government does massive inflationary money drops for the Great Panic of 2030 [2] and creates the mother of all bubbles.

[2] This panic will, of course, be due to either a global overreaction to something that isn’t really truly that bad (™), or an avoidable policy error that leads to either a giant energy crunch[3] or some mass escalation or geopolitical problem[4].

[3] Whoops. Maybe we shouldn’t have shut down so much global power generation (and the knock-on effects on fertilizer, food prices, and prices for everything with energy as a cost input, which is roughly everything) due to the Stockholm-Paris-Seattle protests[5] of 2027?

[4] China-Taiwan? Other?

[5] “Largely peaceful” protests of course[7]. The good news is Seattle is now entirely a giant autonomous zone named SNAZY (Seattle North Autonomous Zone – Yes!), which is quite the snazzy acronym[6].

[6] This goes to show that alongside biologists and the DoD, anarchist activists also like acronyms.

[7] This is a lie. They were not peaceful, but were covered as such by the media for some reason.

