Text Is the Universal Interface – Scale

“This is the Unix philosophy: write programs that do one thing and do it well. Write programs to work together. Write programs that handle text streams, because that is a universal interface.”

Computer software came to maturity in the late 1960s. While most programs to date had been written monolithically, mostly from scratch, uniquely built for building-sized mainframes, several pioneers were starting to standardize a new way of thinking. Some of the programs they wrote have familiar names (e.g. grep, diff) and live on to this day as a toolset for computer programmers handed down through the mists of time, their origins unclear but their utility unquestionable. Their continued success is a microcosm of the continued success of the UNIX philosophy, originated by the titans of computer software Doug McIlroy and Ken Thompson in the early 70s. They noted the power of composable tools that operate on and modify streams of text.

A text stream program comes with automatic ease of collaboration and organization: individuals can change the internals of different programs without worrying about breaking the work of others. After all, the interface is standard. Text stream programs also come with extensibility built in for free. When the rudimentary data format of I/O never changes, you can string together long sequences of nested shell invocations to build ornate software cathedrals just by wiring simple programs together that modify each other’s behavior. Text became the most common lever by which mankind would work computers to do their bidding.

Decades later, researchers began to conquer many previously unsolvable language and text tasks in unison. In February 2019, a precocious rising talent in AI research named Alec Radford came out with a seminal paper on language modeling that made him and his coauthors instant legends not just in the AI community but among a broad audience of technologists. Their patron institution, OpenAI, decided that the powerful model software created for the paper was the first AI plausibly too dangerous to simply release into the wild for fear of what malign actors might do with it. With the slow, eventual release of this model, GPT-2, they ushered in the age of what we might call large language models (LLMs).

In a previous iteration of the machine learning paradigm, researchers were obsessed with cleaning their datasets and ensuring that every data point seen by their models is pristine, gold-standard, and does not disturb the fragile learning process of billions of parameters finding their home in model space. Many began to realize that data scale trumps most other priorities in the deep learning world; utilizing general methods that allow models to scale in tandem with the complexity of the data is a superior approach. Now, in the era of LLMs, researchers tend to dump whole mountains of barely filtered, mostly unedited scrapes of the internet into the eager maw of a hungry model.

The resultant model displays alarming signs of general intelligence: it’s able to perform many sorts of tasks that can be represented as text! Because, for example, chess games are commonly serialized into a standard format describing the board history and included in web scrapes, it turns out large language models can play chess. Noticing these strange emergent properties, other researchers have pushed this general text processing faculty to the limits: a recent paper utilizes a large language model’s common sense reasoning ability to help a robot make decisions. They simply submit a list of potential moves as a text stream and acquire the approval or lack thereof of the AI. The text model knows, for example, that finding a sponge before washing the dishes is more reasonable than the other way around and commands the robot accordingly. Seeing these quite disparate tasks being tamed under one unlikely roof, we have to ask: what other difficult problems can simply be transcribed into text and posed to an oracular software intelligence? McIlroy must be smiling somewhere.

Several are venturing down this intellectual avenue, asking in the spirit of the UNIX pioneers if the general intelligence of large language models can become the core modular cognitive engine for their various software programs and businesses. These intrepid entrepreneurs and engineers who require that the world’s best artificial intelligences behave in a certain way to find value for their users are giving birth to the brand new discipline of prompt engineering. Dennis Xu, a founder of mem.ai, is one such frontiersman pounding down the doors of the future: his company works on a self-organizing note-taking app that works as an intelligent knowledge store, with an AI that curates, tags, and connects different notes.

Dennis’s team knows it doesn’t make any product sense to train their own model to solve their core cognitive task. Trying to understand the breadth of human knowledge from scratch in order to write note summaries and mark thematic tags would be an absurdity. Rather, why not use one of humanity’s most powerful robotic minds readily available to the public over an API? Where collecting one’s own dataset for a downstream application like a note-taking app would require a massive pre-existing user base, GPT-3 (codename: “davinci”), perhaps the world’s most celebrated language model, is out there and has been trained on half a trillion tokens encoding the breadth of common sense reasoning and language task understanding. We can simply ask it to perform tasks like “summarize this document,” “list the topics this note covers,” and others. Of course, it’s never so simple. We have to provide a few examples of notes and their corresponding summaries or topic tags to ensure the model is thinking in the right direction. The basics of the prompt generally consist of a call to action and a few examples that define the space of the task, but this barely scratches the surface.
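To make the shape of such a prompt concrete, here is a minimal sketch of the "call to action plus a few examples" pattern. The function name `build_few_shot_prompt`, the `Note:`/`Summary:` labels, and the sample notes are all invented for illustration; nothing here is mem.ai's actual prompt format.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    new_input: str,
    input_label: str = "Note",
    output_label: str = "Summary",
) -> str:
    """Assemble a call to action, worked examples, and the new input."""
    parts = [instruction.strip(), ""]
    for source, target in examples:
        parts.append(f"{input_label}: {source.strip()}")
        parts.append(f"{output_label}: {target.strip()}")
        parts.append("")
    # End on a bare output label so the model's completion is the answer.
    parts.append(f"{input_label}: {new_input.strip()}")
    parts.append(f"{output_label}:")
    return "\n".join(parts)


# Hypothetical notes and summaries, used only to show the layout.
prompt = build_few_shot_prompt(
    "Summarize each note in one sentence.",
    [
        ("Met with Ana about Q3 hiring; agreed to open two backend roles.",
         "Q3 hiring plan: two backend roles."),
        ("Dentist moved to Friday 3pm, bring insurance card.",
         "Dentist appointment rescheduled to Friday 3pm."),
    ],
    "Read the Unix philosophy essay; pipes compose small text tools.",
)
print(prompt)
```

The resulting string would be sent as-is to a completion endpoint; the examples do the work of defining the task, and the trailing label tells the model where to start writing.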

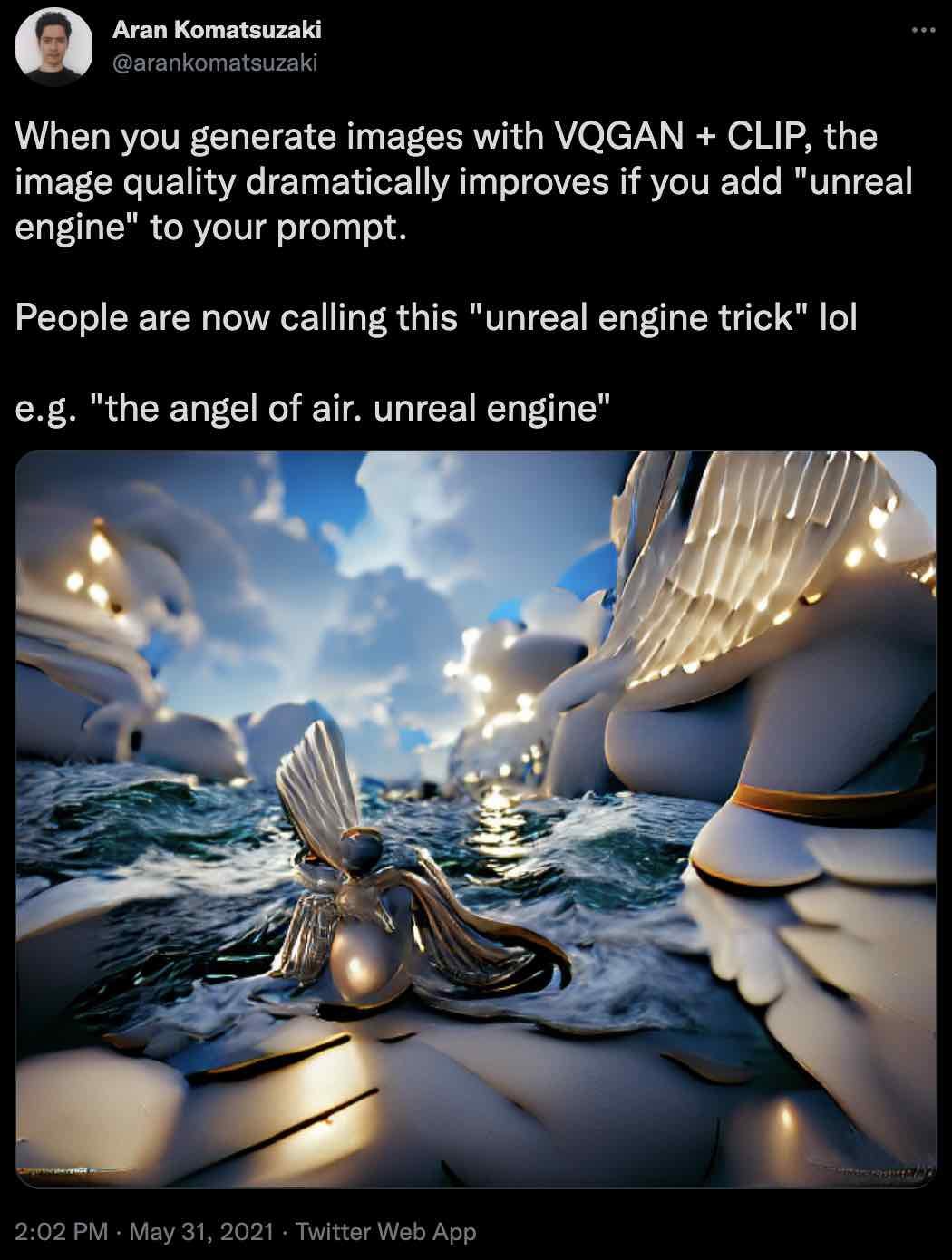

For anyone who works closely with these models, it becomes clear that the vast and comprehensive training gauntlet that creates these technological leviathans embeds some difficult behaviors. While the breadth of example material spawns broadly intelligent digital creatures capable of working on a vast range of text tasks, it can require significant prodding, cajoling, and pleading to get these models into the right mood for the particular task at hand. In the early days after the release of GPT-3, many noticed that the AI seems to become more cogent if you start the completion prompt with something along the lines of “this is a conversation between a human and an extremely intelligent artificial intelligence” versus not including the modifier. Sometimes, GPT seems to be hiding the true depth of its intelligence for the sake of the character it is playing at the moment. The prompt to GPT may even make the difference between being able to solve arithmetic puzzles or not. In another insane quirk of language modeling, text-to-image models seem to create higher-quality art if you specify that it was rendered in “Unreal Engine,” a game engine known for its photorealistic style. A self-supervised language model can be thought of as a vast ensemble of many models that play many characters with different personalities due to the breadth of the document distribution and dizzying variety of writing styles seen in the half a trillion internet-scraped tokens that it studiously observes at training time.

Every prompt a user feeds to a large foundation model instantiates a new model, one more limited in scope: it enters into a different conditional probability distribution, or in other words, a different mood. A language model’s mood gives birth to a new piece of composable modular software that takes in a text token stream and leaves another as output. The elegant power of UNIX-style software comes from the simple but incredibly powerful primitive known as the pipe. It transfers the output of one program to become the input or modifier for another. The output of one prompted query, which asks for a summary of a note, can easily be aggregated and sent to another prompted model that asks to order the summaries by relevance for a given search topic.
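The pipe analogy can be sketched in a few lines. In this hedged example, `prompted` binds a template to a model to produce a text-in, text-out program, and `pipe` chains such programs the way a shell chains `a | b`. Both helper names, both templates, and `fake_complete` (a stand-in that merely numbers its calls instead of contacting a real model) are assumptions made for illustration.

```python
from functools import reduce
from typing import Callable, List

TextFn = Callable[[str], str]


def prompted(template: str, complete: TextFn) -> TextFn:
    """Bind a prompt template to a model: the result maps text to text."""
    def run(text: str) -> str:
        return complete(template.format(input=text))
    return run


def pipe(*stages: TextFn) -> TextFn:
    """Compose stages left to right, like `a | b | c` in a shell."""
    return lambda text: reduce(lambda acc, stage: stage(acc), stages, text)


calls: List[str] = []  # records every prompt the stand-in model receives


def fake_complete(prompt: str) -> str:
    """Stand-in for a real model call; returns a numbered placeholder."""
    calls.append(prompt)
    return f"<completion {len(calls)}>"


# Two hypothetical "moods" of the same underlying model.
summarize = prompted("Summarize this note:\n{input}\nSummary:", fake_complete)
rank = prompted(
    "Order these summaries by relevance to the topic 'unix':\n{input}\nRanking:",
    fake_complete,
)

summarize_then_rank = pipe(summarize, rank)
result = summarize_then_rank("Pipes connect small programs.")
print(result)
print(calls[1])
```

The second recorded prompt contains the first stage's output, which is the whole point: each prompted model neither knows nor cares what produced its input. A real pipeline would aggregate many summaries before ranking; this sketch passes a single one through to keep the plumbing visible.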

The pure energy of modular software creation is buzzing in the prompt engineering war room, turning human will into repeatable programs with far reduced effort: find a prompt, don’t write a program. Dennis and his team work hard to find the right incantation to coax GPT-3 into the right mood to perform the task at hand. Like most engineering, it’s an ongoing optimization process. OpenAI’s pricing model means that API users must take care to use as few tokens for the prompt as possible, since the token is the basic unit of cost in the GPT-3 API. A prompt that uses only 100 tokens to get GPT-3 doing the right thing is preferable to one that takes 400. Prompt engineers must play a delicate game of asking the model to think well while shortening their incantations.
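The arithmetic behind that preference is simple enough to write down. The sketch below compares the 100 and 400 figures above, counted in tokens since that is the billing unit; the per-token price and the monthly call volume are made-up numbers, not OpenAI's actual rates or mem.ai's traffic.

```python
def monthly_prompt_cost(prompt_tokens: int, calls_per_month: int,
                        usd_per_1k_tokens: float) -> float:
    """Cost of the fixed prompt prefix alone, ignoring inputs and completions."""
    return prompt_tokens * calls_per_month * usd_per_1k_tokens / 1000


# Both constants are hypothetical, chosen only to make the comparison concrete.
PRICE = 0.02        # assumed USD per 1,000 tokens
CALLS = 1_000_000   # assumed API calls per month

long_cost = monthly_prompt_cost(400, CALLS, PRICE)
short_cost = monthly_prompt_cost(100, CALLS, PRICE)
savings = long_cost - short_cost
print(f"long: ${long_cost:,.2f}  short: ${short_cost:,.2f}  saved: ${savings:,.2f}")
```

Because the prompt prefix is paid for on every call, its cost scales linearly with traffic, so trimming it is one of the few optimizations whose payoff grows with the product's success.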

These resourceful engineers are adapting some of the methods of the machine learning world to the prompt engineering world: an old bag of tricks under a new layer of abstraction. For example, they create tools to store “test sets” of difficult task examples to assess the quality of a new prompt (or an old prompt after a model change). Let’s say that a prompt engineer has an idea for shortening the text prompt that asks the machine for document summaries. It could potentially save thousands a month on API fees. She tries it on a gauntlet of twenty examples (“the test set”) that the team has concluded are interesting and duly illustrate the performance of the model. After checking that all twenty examples are producing successful results (or at least the performance is not worse than before), she can roll out her change in an automated way into the product. Using these methods to assure safety and speed up experimentation, the mem.ai team creates such innovations constantly. They may even cleverly compose several tasks in the same query (multi-task inference!), further decreasing costs. All input is text, so there are several obvious ways to recombine and reroute queries into different prompts. All output is text, so human reviewers can quickly examine a few test examples and check for correctness and performance.
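A prompt "test set" of this kind might look something like the following sketch. The `Case` structure, the two templates, and the pass criteria are all hypothetical, and `fake_complete` is a deterministic stand-in for a model call so the example runs offline; a real harness would call the API and would likely involve human review of the outputs.

```python
from dataclasses import dataclass
from typing import Callable, List

TextFn = Callable[[str], str]


@dataclass
class Case:
    note: str                      # input document
    passes: Callable[[str], bool]  # check applied to the model's output


def pass_count(template: str, complete: TextFn, cases: List[Case]) -> int:
    """Run every test case through the prompt and count passing outputs."""
    return sum(
        1 for case in cases
        if case.passes(complete(template.format(input=case.note)))
    )


def candidate_is_no_worse(old: str, new: str, complete: TextFn,
                          cases: List[Case]) -> bool:
    """Gate a rollout: the new prompt must pass at least as many cases."""
    return pass_count(new, complete, cases) >= pass_count(old, complete, cases)


def fake_complete(prompt: str) -> str:
    """Stand-in model: 'summarizes' by returning the note's first three words."""
    note = prompt.split("Note:", 1)[1].split("Summary:", 1)[0]
    return " ".join(note.split()[:3])


OLD = "Please write a short summary of the following note.\nNote: {input}\nSummary:"
NEW = "Summarize.\nNote: {input}\nSummary:"  # the shorter candidate

CASES = [
    Case("Pipes connect small programs together.", lambda out: "Pipes" in out),
    Case("Text streams are a universal interface.", lambda out: len(out.split()) <= 3),
]

print(pass_count(OLD, fake_complete, CASES), pass_count(NEW, fake_complete, CASES))
print(candidate_is_no_worse(OLD, NEW, fake_complete, CASES))
```

The same harness serves both purposes mentioned above: rerun it with a new template to vet a prompt change, or rerun it with a new `complete` function to vet a model change.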

It is easy to bet against new paradigms in their beginning stages: the Copernican heliocentric model of cosmology was originally less predictive of observed orbits than the intricate looping geocentric competitor. It is simple to play around with a large language model for a bit, watch it make some very discouraging errors, and throw in the towel on the LLM paradigm. But the inexorable scaling laws of deep learning models work in its favor. Language models become more intelligent like clockwork due to the tireless work of the brilliant AI researchers and engineers concentrated in a few Silicon Valley companies to make both the model and the dataset larger. OpenAI’s new model available in beta (codename: davinci2) is dramatically smarter than the old one unveiled just two years ago. Like a precocious child, a more intelligent model requires less prompting to do the same job better. Prompt engineers can do more with less effort over time. Soon, prompting may not look like “engineering” at all but a simple dialogue with the machine. We see that the gradient points in the right direction: prompting becomes easier, language models become smarter, and the new universal computing interface begins to look inevitable.

Sometimes, despite our best efforts, we must leave the realm of text. There are endless forms of computation that operate on other media: much of the digital content that exists is photos, videos, and graphical interfaces, and users adore all of it. We can already see that the power of natural language is being leveraged to ply and manipulate these as well. Rapidly advancing models such as DALL-E and Stable Diffusion are proliferating on the internet, generating incredible social media buzz about the advent of AI art and what it means for humanity. These tools allow us to take snapshots of our imaginations, communicate them only through the versatile interface of text, and command powerful intelligences to reify that vision into pixels. This technology is remarkable: even the ancients conceived of a gift that could take a picture of the mind, and now it’s available to anyone who can type.

Others are pursuing even more audacious efforts to command other modalities under text. The team at Adept.AI, which includes some of the legendary authors of the seminal paper introducing the Transformer architecture used in all modern language models, notes that LLMs, for all their generalized intelligence, cannot take seamless action in the graphical interface world, and is aiming to fix that discrepancy posthaste. An example listed on their blog: you tell the computer, in text, to “generate our monthly compliance report” and watch as your will unfolds on screen, as a computer mind converts text into action on an Excel or Word interface. In this paradigm, art, graphics, and GUIs themselves will be commanded under the modality of text.

Slowly but surely, we can see a new extension to the UNIX credo being born. Those who truly understand the promise of large language models, prompt engineering, and text as a universal interface are retraining themselves to think in a new way. They start with the question of how any new business process or engineering problem can be represented as a text stream. What is the input? What is the output? Which series of prompts do we have to run to get there? Perhaps we notice that the person in charge of the corporate Twitter account is painstakingly transforming GitHub changelogs into tweet threads every week. There’s a prompt somewhere that solves this business challenge and a language model mood corresponding to it. With a smart enough model and a good enough prompt, this may be true of every business challenge. Where textual output alone is truly not enough, we train a joint embedding model such as DALL-E that translates text input into other domains.

The most complicated reasoning programs in the world can be defined as a textual I/O stream to a leviathan living on some technology company’s servers. Engineers can work on improving the quality and cost of these programs. They can be modular, recombined, and, unlike typical UNIX shell programs, are able to recover from user errors. Like shell programs living on through the ages and becoming more powerful as underlying hardware gets better, prompted models become smarter and more on task as the underlying language model becomes smarter. It’s possible that in the near future all computer interfaces that require bespoke negotiations will pay a small tax to the gatekeeper of a large language model for the sheer leverage it gives an operator: a new bicycle for the mind. Even today, many of us find ourselves already reliant on tools like GitHub Copilot, despite their very recent invention, to read, suggest, and modify text for the creation of our more traditional software programs. Thousands or millions of well-crafted language model moods will proliferate, interconnected, each handling a small cognitive task in the tapestry of human civilization.

“The language model works with text. The language model remains the best interface I’ve ever used. It’s user-friendly, composable, and available everywhere. It’s easy to automate and easy to extend.”

Follow the author @tszzl on Twitter.