No Machine Learning in your product? Start here – The Lever – Medium

Advice from the trenches of machine learning integration

In the machine learning (ML) era, leveling up your Product Management team with some ML knowledge and skills is more vital than ever, and you might be surprised at how accessible the essentials are. And yet, those skills are all too rare. I’ve spent years in the trenches as a PM at Google and have distilled some of the learnings into a couple of blog posts for you.

Being a PM gives you a great vantage point from which to watch technology evolve. Personally witnessing Google gradually integrate ML into the majority of our product offerings? What a view! I’m excited to share it with you, but first things first… what is a Google PM?

The notion of a PM as the product’s CEO, although imperfect, provides a good framework. PMs at Google are technically strong and commonly involved in engineering discussions and designs. Our PM ladder requires PMs at certain levels to “sign off on overall technical direction and architecture” or to be able to “describe, in detail, the full stack and interactions of the product sub-area on a technical level”. However, a deep understanding of ML/AI often requires years of applying ML in research and practice. In an AI-first company, does that mean we have to send all of our PMs back to school?

I argue that PMs do NOT need to know the nitty-gritty details of ML. They don’t need to know exactly which algorithm to apply when, or how ML models are deployed to billions of users. Rather, they should rely on their software engineering (SWE) teams and focus on how they can contribute to ML-driven product definition and strategy.

In this post I aim to share some best practices for what product owners should know about ML, namely:

- The different levels at which ML drives your products

- An overview of tools in the ML toolbox

To set these best practices in a practical context, I also worked with the Google Forms team on a short case study focused on how they went about integrating their first ML feature into their product.

How much ML is in your product?

One of the main factors influencing how much a product manager needs to know about machine learning is how central ML is to a given product or feature. For simplicity, let’s consider three different levels of ML integration in a product:

- ML improves an existing feature (e.g. increased accuracy of a recommendation system)

- ML as an enabler for entirely new features (e.g. photo search by content rather than keyword)

- ML as an enabler for entirely new products (e.g. driverless cars)

Customers who bought this item also bought…

Level 1 integration: Supercharging an existing feature with ML.

Recommendation systems have been around for a while, but before ML, they relied on simple heuristics to recommend similar products. Co-occurrence of products in a shopping basket is one heuristic that has traditionally been used to recommend products without any fancy algorithms. These types of recommendation systems are fairly simple to integrate. However, they also do a relatively poor job of generalizing to new products, which have little or no purchase history to draw on. ML algorithms have dramatically improved recommendation systems such as the well-known “next product to buy”. These systems are examples of ML improving an existing feature.
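To make the pre-ML heuristic concrete, here is a minimal sketch of basket co-occurrence in Python. The basket data and function names are hypothetical, and real systems add normalization and filtering on top; the point is only to show how simple the heuristic is, and why an item nobody has bought yet gets no recommendations at all.

```python
from collections import Counter, defaultdict
from itertools import permutations


def build_cooccurrence(baskets):
    """Count how often each ordered pair of distinct items shares a basket."""
    counts = defaultdict(Counter)
    for basket in baskets:
        # set() so an item bought twice in one basket is only counted once
        for a, b in permutations(set(basket), 2):
            counts[a][b] += 1
    return counts


def also_bought(counts, item, k=3):
    """Return up to k items most often bought together with `item`."""
    # .get avoids inserting an empty entry for items never seen before
    return [other for other, _ in counts.get(item, Counter()).most_common(k)]


# Hypothetical purchase history
baskets = [
    ["tent", "sleeping bag", "lantern"],
    ["tent", "sleeping bag"],
    ["tent", "stove"],
    ["lantern", "batteries"],
]
counts = build_cooccurrence(baskets)
print(also_bought(counts, "tent"))            # 'sleeping bag' comes first (bought with a tent twice)
print(also_bought(counts, "brand-new item"))  # [] : no purchase history, so nothing to recommend
```

The empty result for a brand-new item is the generalization gap described above: the heuristic can only echo purchases that have already happened.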

Let’s find pictures of cats…

Level 2 integration: Making a new feature that is powered by ML.

Just a few years ago, if you wanted to search your photos for a specific person or place, you would have to either remember when it was taken and search by the timestamp, or rely on manual tags that you painstakingly added to the images. Because the process was so tedious, photo search was a feature that effectively didn’t exist for the vast majority of users.

Facial recognition, object detection, and image classification have fundamentally changed the user experience of storing and searching for photos. These ML technologies enabled a set of entirely new features like searching for photos based on their content without having to manually tag them, or automatically grouping photos that contain the same person, scene, or location. Thus, the application of ML to photo storage effectively introduced new product features that couldn’t have existed before.

Let’s try to drive a car, without a driver…

Level 3 integration: Creating entirely new products using ML.

While I don’t believe we’ve seen many ML-enabled products (as opposed to features) yet, one of the closest examples we have on the horizon is the autonomous vehicle. To be honest, it is hard to come up with products that could not have existed before ML for the simple reason that they haven’t been conceived yet.

There will very likely be a class of products which are not extensions or improvements of existing products, but new categories altogether. This type of product development involves a lot of fundamental technology problems (think of the decade-long time investment Google has already made into self-driving cars), and uses fundamentally different product management methodologies than improving existing features (see BrainQ case study for an example).

Understanding what tools are in the toolbox

As mentioned above, improving existing features is the most common way ML is applied today. Because these improvements target features that already exist, the user benefit and behavior are usually fairly well understood (e.g. how the product is currently used), and there have typically been earlier efforts to solve the same customer or product need. At this level, the PM doesn’t necessarily need to know exactly how the ML algorithms work, but it is good to know what general types of ML solutions exist.

- Recommender/ranking systems are used to provide a ranked list of documents from a corpus. These “documents” can be anything from apps in the app store to movies on Netflix. The “corpus” simply refers to the full set of documents available.

- Event/action prediction refers to ML models that try to predict the likelihood of an event or user action. One of the most common applications of ML at Google is to predict a click (e.g. to show you videos that you are most likely to click on).

- Classification can help sort arbitrary objects into known classes. One example is categorizing emails as spam or not spam. Another is classifying whether a picture contains a dog.

- Generative models are ML models that can generate output in a similar form to the input they were trained with. E.g. translation models take text in one language as an input and generate text in another language as an output.

- Clustering is a common form of unsupervised machine learning in which objects that are similar are “clustered” together, e.g. to segment users into groups.
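Event prediction and ranking often work together: a model estimates the probability of a click for each document, and the ranking system sorts the corpus by that estimate. The sketch below assumes a hand-written logistic model with made-up feature names and weights (`WEIGHTS` and `BIAS` are hypothetical); in a real product the weights would be learned from logged user interactions, and the model would usually be far richer.

```python
import math
from dataclasses import dataclass


@dataclass
class Video:
    title: str
    features: dict  # feature name -> numeric value


# Hypothetical weights; a real model would learn these from logged clicks.
WEIGHTS = {"watched_same_channel": 1.5, "topic_match": 2.0, "video_age_days": -0.05}
BIAS = -2.0


def click_probability(features):
    """Event prediction: a logistic model estimating P(click | features)."""
    z = BIAS + sum(WEIGHTS.get(name, 0.0) * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def rank(corpus):
    """Ranking: order the whole corpus by predicted click probability, highest first."""
    return sorted(corpus, key=lambda v: click_probability(v.features), reverse=True)


# Hypothetical corpus of three videos
corpus = [
    Video("Old lecture", {"watched_same_channel": 1.0, "topic_match": 1.0, "video_age_days": 100.0}),
    Video("Channel update", {"watched_same_channel": 1.0, "topic_match": 0.0, "video_age_days": 2.0}),
    Video("Cooking basics", {"watched_same_channel": 0.0, "topic_match": 1.0, "video_age_days": 0.0}),
]
for video in rank(corpus):
    print(f"{click_probability(video.features):.2f}  {video.title}")
```

With these made-up weights, “Cooking basics” ranks first and the old lecture drops to the bottom because its age outweighs its other signals. Swapping in a better click model changes the scores without changing the ranking step.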

Google Forms case study

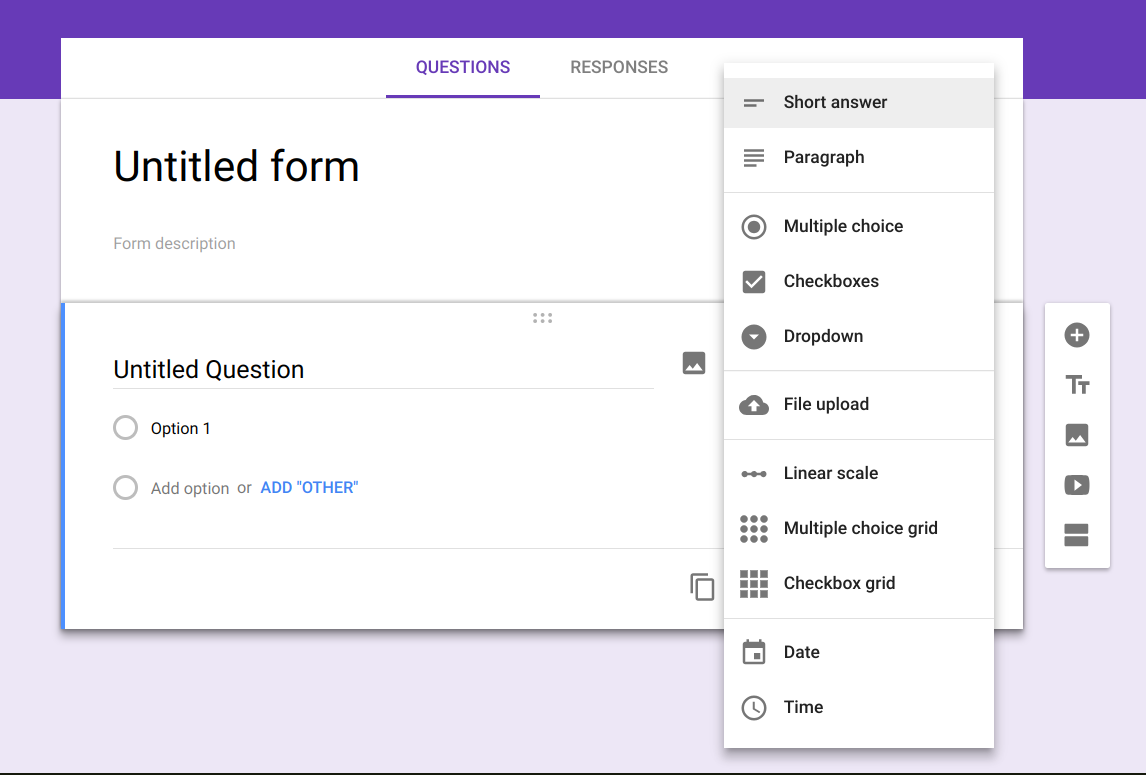

Let’s look at a real-world example of integrating an ML feature in an existing product which most readers are familiar with: Google Forms. In the past, creating a Google Form was a fairly static process from the user perspective. The user would name the form, add questions, and choose the question type (e.g. short answer, multiple choice, or checkboxes), with each of these steps being done manually.

One of the key roles of a PM (regardless of the presence of ML in the product) is to deeply understand users and their workflows, and to build a product roadmap that better serves their needs. One area the Google Forms team identified for potential improvement was automating the selection of the “question type”, a feature that would reduce the cognitive load of using the product and create a more delightful user experience.

What are we trying to maximize?

One of the most important questions a PM must ask during the process of scoping features is “what is this feature seeking to maximize/minimize?”

In the case of the autocomplete question type, “we were trying to reduce time to creating a form but more importantly, reducing the cognitive load for users to create a form. ML recommendations help users make decisions quickly.” This leads to a magical product experience that our users love to come back to.

When/how was the PM involved?

It is common for new product features to originate either in engineering (with a new technical capability) or with PMs (informed by an insight into non-intuitive customer behaviors). This specific feature within Forms happened to be driven by the engineering team at the early, experimental stage, since its main challenge was technical rather than UX- or customer-oriented. We were reasonably sure that, if the feature was launched using good product management practices, the risk of creating a bad user experience was low.

There were several areas in which the PM was most involved in the deployment of this feature:

- Brainstorming what signals to use to train the model.

- Figuring out how to best surface the experience (e.g. provide a suggested question type vs. auto-apply a suggestion).

- Helping the team analyze outcomes of different models.

- Planning and managing launch strategy (e.g. can we launch internally or to a subset of users and see if the users have a pleasant experience?).
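Launching internally or to a subset of users is commonly implemented by deterministically bucketing user IDs, so each user keeps the same experience across sessions while the rollout percentage grows. This is a minimal sketch of that general idea, not how Google Forms was actually rolled out; the feature name, bucket count, and `internal_users` set are hypothetical.

```python
import hashlib


def rollout_bucket(user_id: str, feature: str, buckets: int = 100) -> int:
    """Map a user deterministically to a bucket in [0, buckets) for one feature."""
    # Salting with the feature name gives each feature an independent user split.
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def is_enabled(user_id: str, feature: str, percent: int,
               internal_users: frozenset = frozenset()) -> bool:
    """Enable for all internal (dogfood) users, plus `percent`% of everyone else."""
    if user_id in internal_users:
        return True
    return rollout_bucket(user_id, feature) < percent


# Hypothetical usage: dogfood first, then 5% of external users.
INTERNAL = frozenset({"dogfooder@example.com"})
users = [f"user{i}" for i in range(10_000)]
share = sum(is_enabled(u, "question_type_suggestion", 5, INTERNAL) for u in users) / len(users)
print(f"{share:.1%} of users see the feature")  # close to 5%
```

Because a user's bucket never changes, raising `percent` from 5 to 20 keeps everyone who already had the feature and only adds new users, which keeps the experience stable while you watch the metrics.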

PM strategy for a smooth ML feature rollout

Since ML-powered features are trained on a varied corpus of data, and almost no new real-world example is identical to one the model was trained on, one potential shortcoming of any ML-powered feature is that it will occasionally make nonsensical recommendations or predictions. From a PM perspective, several best practices can limit how often this happens and make it easy for users to ignore a suggestion or correct an automated action. For the Forms example, we did the following:

- Ensure that the model yields good results on test data.

- Ensure that the feature only triggers when the model’s confidence in its prediction is high.

- Ensure that subsequent user actions are accounted for (e.g. if a user explicitly changes a question type after we apply the suggestion).
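The safeguards above can be sketched as a small gate in front of the model. This assumes a hypothetical model that returns a probability for each question type; the threshold value, the `Question` fields, and the default question type are illustrative assumptions, and in practice the threshold would be chosen by evaluating the model on held-out test data.

```python
from dataclasses import dataclass

# Hypothetical value; in practice tuned by evaluating the model on test data.
CONFIDENCE_THRESHOLD = 0.8


@dataclass
class Question:
    text: str
    question_type: str = "Multiple choice"  # assumed default type
    user_set_type: bool = False             # True once the user picks a type by hand


def apply_suggestion(question, probabilities, threshold=CONFIDENCE_THRESHOLD):
    """Auto-apply the most likely type only if confident and not overridden by the user."""
    if question.user_set_type:
        return False  # never overwrite an explicit user choice
    best_type, best_p = max(probabilities.items(), key=lambda kv: kv[1])
    if best_p < threshold:
        return False  # low confidence: keep the current type and stay quiet
    question.question_type = best_type
    return True


def user_changes_type(question, new_type):
    """Record an explicit user choice so later suggestions leave it alone."""
    question.question_type = new_type
    question.user_set_type = True


# Hypothetical model outputs
q = Question("Which kinds of food do you like?")
apply_suggestion(q, {"Checkboxes": 0.9, "Multiple choice": 0.1})   # applied: confident
user_changes_type(q, "Dropdown")                                     # user corrects it
apply_suggestion(q, {"Checkboxes": 0.95, "Dropdown": 0.05})         # ignored: user override
print(q.question_type)
```

Keeping the override flag on the question itself means one explicit correction is enough; the model does not keep fighting the user on every keystroke.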

Results

The result of this process was the successful deployment of an “autocomplete” feature within Google Forms. Google Forms now predicts the “question type” (e.g. short answer, multiple choice, or checkboxes) based on the text the user types into the “question” field, instead of requiring the user to select the question type manually.

For example, the “question type” automatically switches to “Checkboxes” when the user types “which kinds of food do you like?”, since the question implies selecting more than one option. If the user enters “How happy are you with this product?”, the question type automatically switches to a 1 to 5 linear scale.
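The post does not say which model Google Forms uses. Purely to illustrate the general technique of predicting a question type from question text, here is a toy multinomial naive Bayes classifier with add-one smoothing; the training examples are hypothetical and far too few for real use, and a production model would be trained on much more data.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and keep only word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class QuestionTypeClassifier:
    """Toy multinomial naive Bayes text classifier with add-one (Laplace) smoothing."""

    def fit(self, examples):
        self.class_counts = Counter()
        self.word_counts = defaultdict(Counter)
        for text, label in examples:
            self.class_counts[label] += 1
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict_proba(self, text):
        """Return {label: posterior probability}; words unseen in training are ignored."""
        words = [w for w in tokenize(text) if w in self.vocab]
        total_docs = sum(self.class_counts.values())
        log_scores = {}
        for label, n_docs in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n_docs / total_docs)  # log prior
            score += sum(math.log((counts[w] + 1) / denom) for w in words)
            log_scores[label] = score
        # Normalize in log space (log-sum-exp) so the probabilities sum to 1.
        top = max(log_scores.values())
        exp_scores = {k: math.exp(v - top) for k, v in log_scores.items()}
        z = sum(exp_scores.values())
        return {k: v / z for k, v in exp_scores.items()}

    def predict(self, text):
        probs = self.predict_proba(text)
        return max(probs, key=probs.get)


# Hypothetical labeled examples
TRAINING = [
    ("which kinds of food do you like", "Checkboxes"),
    ("which days are you available", "Checkboxes"),
    ("select all topics you like", "Checkboxes"),
    ("how happy are you with this product", "Linear scale"),
    ("how satisfied are you with the service", "Linear scale"),
    ("on a scale how likely are you to recommend us", "Linear scale"),
    ("what is your name", "Short answer"),
    ("what is your email address", "Short answer"),
]

clf = QuestionTypeClassifier().fit(TRAINING)
print(clf.predict("Which colors do you like?"))            # Checkboxes
print(clf.predict("How satisfied are you with our app?"))  # Linear scale
```

The posterior from `predict_proba` doubles as a confidence score, which is what a “only trigger when confident” rule would compare against a threshold.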

In summary, since commercially deployed ML is still in its adolescence, most systems today fall into the Level 1 (“incremental”) category: taking an existing feature and improving it through the application of ML. For this level of integration, most PMs should be able to own the entire process regardless of their ML expertise, by working closely with their engineering team. The core skills of being a PM for a Level 1 ML integration are the foundational building blocks for deploying the ML-driven features and products (Levels 2 and 3) of the future, a skill set that will only become more important as the technology and infrastructure mature.

Good luck and keep an eye out for my next posts! In the meantime I recommend learning more about how ML is deployed in other products by searching for case studies online.

Clemens Mewald is a Product Lead on the Machine Learning X and TensorFlow X teams at Google. He is passionate about making Machine Learning available to everyone. He is also a Google Developers Launchpad mentor.