What is Ambient Computing? – Narain Jashanmal – Medium

Perhaps it’s easiest to start by saying what it isn’t. It’s not simply another name for the Internet of Things (IoT), though there are aspects of IoT that embody Ambient Computing.

Nor is it a revolution. Rather, it is guided evolution: making conscious choices about the direction in which we want to see computing go. The definition of computing used here is a broad one that encompasses hardware, software, user experience and human-machine interaction.

Evolution implies that it builds on what has come before it, with an emphasis on familiarity and inclusiveness. Designer Luke Wroblewski focuses much of his usability analysis on this theme; his work can be found on Twitter and his site. One example is his exploration of the evolution of voice-to-text input for messaging on Apple Watch.

The Ten Characteristics of Ambient Computing

- Invisible

- Embedded

- Familiar

- Discreet & Discrete

- Distributed

- Modular

- Symbiotic

- Personal

- Inclusive

- Conservative

1 — Invisible

Historically, new technology has called attention to itself. Thanks to miniaturization, as well as the phenomenon of software eating the world and thus becoming at least equal to hardware in importance, this no longer has to be the case. Some of the most significant technological advancements have been nearly invisible, while still happening right before our eyes. The emphasis is on the experience delivered to the end user, not on the mechanics of what it takes to deliver that experience. Examples include:

- At an infrastructure level, data centers are physically massive but invisible to the vast majority of consumers.

- The shift from cameras being specialized devices owned by few people to being a commoditized, general-purpose input mechanism on smartphones.

- Touchscreens (which are themselves transparent, if not actually invisible) have become ubiquitous. Growth can be measured by the amount of glass being manufactured for touchscreens.

- Continual advances in Central Processing Unit (CPU) design, architecture and manufacture, as well as the rise of the Graphics Processing Unit (GPU), especially in the field of Machine Learning. For GPUs, this was fueled by consumer applications such as gaming, which created a critical mass of demand and in turn made it viable for NVIDIA, AMD and others to invest in research & development that advanced the state of the art.

- Every type of data imaginable is housed in databases and made available, either via dedicated User Interfaces or Application Programming Interfaces (APIs). An example of the former is what the National Basketball Association has built using SAP HANA.

2 — Embedded

Technology has found its way into even the most commonplace objects, if not always in their final application, then certainly upstream, during their design and manufacturing process.

Whether part of the upstream process or contained within the product, this implies that technology is an inherent part of the product, whether it be a stationary device such as a Nest thermostat or the highly portable Apple Watch.

Embedding technology within such familiar objects to the extent that it enhances, simplifies, automates or brings entirely new functionality to existing form factors takes advantage of people’s existing comfort levels while making use cases clear.

3 — Familiar

Furthermore, it implies that the controls for such technology should be obvious to even the most inexperienced user. Ideally, the controls should be so simple that nine out of ten people choose to set it and forget it.

Simplicity, however, shouldn’t obscure utility.

4 — Discreet & Discrete

Technology should be there when you need it and out of sight when you don’t.

The emphasis on making the technological aspects of products disappear could lead to the manufacture of products that are single purpose in nature, but that co-exist in a broader ecosystem.

Adding sensors to products that previously didn’t have them doesn’t mean that the functionality those sensors enable needs to be front and center; in some cases there are benefits to displays or controls that are externally analog even if they map to digital functions.

5 — Distributed

Given smartphones’ ubiquity, the temptation for device makers to use them as the unifying layer between disparate devices makes sense. Common standards such as Bluetooth and Wi-Fi, each with its own set of limitations, have further enabled this.

There’s merit to this approach, but there are also limitations — the hurdles that smart lock manufacturers have been grappling with are an example.

If a technology is distributed, it is worth exploring the use-cases for it as something that is both self-contained and connected.

6 — Modular

But, if that’s the case, then how can these devices function together as a whole? What is the connective tissue? Here is where this theory deviates from the applications that we’ve seen so far, whether in the IoT space, with its multiple competing standards, or in products that rely on smartphones and their operating systems to be that unifying layer.

Ideally, people should be that layer.

7 — Symbiotic

There’s currently an extensive amount of thought and writing about the ill effects of technology on both the individual and society. While it is generally accepted that the benefits outweigh the costs, especially over the long term, there is an aspect of the technology prevalent in our lives today that could be considered parasitic: it takes more from us in time and attention than it gives back in utility and delight.

Products that follow the ethos of Ambient Computing seek to invert this equation by putting people at their center.

A significant focus of interaction design has been removing friction from various processes, such as one-click checkout, and there’s much value to this work. However, it raises questions about what removing friction means in some contexts. Does it encourage thoughtlessness? Is there a countervailing argument in favor of adding friction to promote intentionality?

8 — Personal

One area where the value of removing friction is clear, if not unambiguously so, is personalization: storing personal preferences, the use of background location, data mining to create cohorts based on affinity and similarity.

People clearly value the benefits, but a vocal minority is concerned about the attached costs and the lack of portability and interoperability between the various systems in which user data is housed.

Apple has been applauded for its approach, which pairs Machine Learning done at a device level, where personalization takes place, with differential privacy, a technique that adds statistical noise to the data sent to its servers so that aggregate patterns can be learned without identifying individuals.
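To make the idea of differential privacy a little more concrete, here is a minimal sketch of randomized response, a classic textbook technique for adding noise on the device before anything is shared. It is not Apple’s implementation, which is considerably more elaborate; the function names, the `epsilon` value and the simulated population are all assumptions chosen for illustration.

```python
import math
import random


def truth_probability(epsilon: float) -> float:
    """Probability of reporting the real answer for a given privacy budget."""
    return math.exp(epsilon) / (1.0 + math.exp(epsilon))


def randomized_response(truth: bool, epsilon: float, rng: random.Random) -> bool:
    """Runs on the device: report the real bit most of the time, else flip it."""
    return truth if rng.random() < truth_probability(epsilon) else not truth


def estimate_true_rate(reports: list[bool], epsilon: float) -> float:
    """Runs on the server: remove the known bias from the noisy reports."""
    p = truth_probability(epsilon)
    observed = sum(reports) / len(reports)
    # observed = p * rate + (1 - p) * (1 - rate), solved for rate
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the simulation is repeatable
    epsilon = 1.0  # hypothetical privacy budget; smaller means more noise
    # Hypothetical population: 30% of 100,000 users have some preference.
    truths = [i < 30_000 for i in range(100_000)]
    reports = [randomized_response(t, epsilon, rng) for t in truths]
    print(f"estimated rate: {estimate_true_rate(reports, epsilon):.3f}")
```

No single report can be trusted, since any user may have flipped their answer, yet the estimate across many users lands close to the real share. That is the trade being described: useful aggregate insight without a reliable record of any one person.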

9 — Inclusive

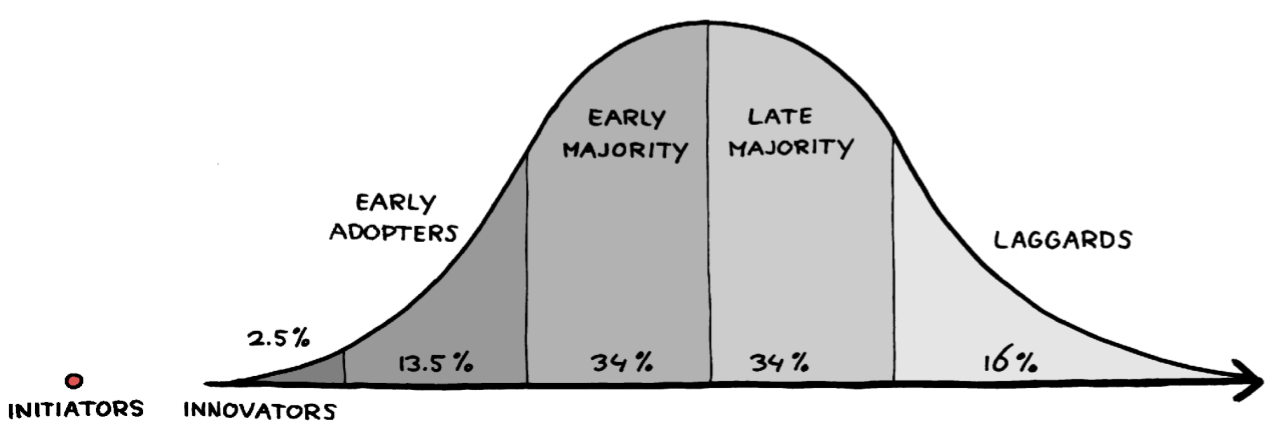

There’s been a tendency for technology, especially newly released products, to be built for a limited audience who can understand it, learn its language or afford it. Partially, this has to do with the adoption curve that we’ve seen various technologies take over the course of their life-cycle (known as the theory of Diffusion of Innovations).

However, specific products can be introduced at different points along the life-cycle of a given technology, and those that embody Ambient Computing aim for a stage at which critical mass has already been achieved and thus rely on platforms that are broadly adopted.

An example here is messaging: SMS, Apple iMessage, Facebook Messenger, WhatsApp and WeChat are all apps or services with at least high hundreds of millions of active users. It can therefore be assumed that people are broadly familiar with the basic mechanics of how these services operate and, with a growing set of developer and business tools being released, they are becoming increasingly robust platforms in their own right.

10 — Conservative

Embrace constraints. While there is an aspect to innovation that seeks to push technology to its bleeding edge, there’s an argument to be made that operating within a given set of constraints, whether self-imposed or governed by prevailing technology, can lead to more rapid adoption. These seemingly competing aspects can co-exist in the same device, with trade-offs made across different dimensions.

The original iPhone offers an example: while a capacitive touchscreen that enabled pinch-to-zoom and inertial scrolling was novel and best-in-class, the 2 megapixel camera and EDGE connectivity were pedestrian. However, the former engendered consumer delight and spurred demand, while the latter helped minimize impact on a battery that already took up most of the space within the device, making it more usable.

The limited capabilities of the camera gave rise to apps such as Hipstamatic and Instagram that embraced those limitations until, eventually, mobile photography came into its own, delivering quality far beyond that of cameras that mass market consumers had previously enjoyed.

In the context of Ambient Computing, constraints such as operating in harsh conditions or with limited battery life, or utilizing existing screens rather than slapping another screen on something, offer guidance about how to solve problems.

Conclusion

Not every product will exhibit all of these characteristics. Therefore, they should be seen as a framework, rather than a rigid template.

The intention of this post is to serve as a working and evolving definition of the concept, as well as to kick off discussion and exploration.

To get involved, feel free to comment here and visit www.ambient-computing.io