The JavaScript World Domination

From browsers to mobile phones, from tablets to tabletops, from industrial automation to the tiniest microcontrollers — JavaScript seems to creep into the most unexpected places these days. It’s not too long until your very toaster will be running JavaScript… but why?

How exactly did we end up here?

In recent months I have frequently been asked to talk about next-level JavaScript (mostly ES6 and the JavaScript-of-things) and DOM technologies (e.g. Service Workers) at meetups and conferences in Hungary.

At one of those meetups I had a chance to present on “JavaScript’s World Domination”. In retrospect, five minutes of stage time was bound to be a bit slim to cover both some of JavaScript’s history and the incredibly vast and diverse potential just waiting to explode onto the JS-biosphere, yet that was the basic idea.

Anyway, to stick to the point, after the talk someone in the audience asked me the question:

“Why hadn’t you mentioned Netscape’s original server-side JavaScript solution, but instead pointed out node.js as ‘How it all started’?”

Well (apart from the obvious time constraint involved) this was a fair question, even though I think it was based on a wrong assumption about what “How it all started” referred to. I thought it deserved a more elaborate answer than the one I gave, which was something like:

“While node wasn’t the first server-side JavaScript solution, it certainly was a turning point with regard to how JavaScript’s used today”.

Server-side JavaScript in itself is a topic that would be troublesome to fit in its own five-minute talk — but here, what I meant is that with node.js a new era has begun for JavaScript — and this new era was about so much more than just server-side JavaScript! But well, let’s not get ahead of ourselves…

Accidental JavaScript

When someone sets out to learn the ropes of the ECMAScript standard, they are bound to learn a lot, not just about the future of JavaScript but (in order to fully understand the editors’ motivations and constraints) about its history, too. This is exactly what happened to me: as I slowly pieced together the fragments of JavaScript lore, it became apparent that this was a language with a lot of “baggage”, one with quite a few skeletons in various closets to uncover.

JavaScript is a kludge — most of you reading this will be well aware of its anecdotal hasty inception, by its creator Brendan Eich. However (and this is important) this isn’t necessarily a bad thing!

JavaScript is a kludge in the way our very brains are: its advances are more evolutionary than revolutionary, and it is bogged down by all the cruft accumulated during its early days that it just could not afford to dispose of. In the meantime it is thriving, both in numbers and in the finicky, smart additions that conceal its ancient aspects.

Before we go on a trip down memory lane to start our Civilization-esque journey of JS-evolution, here is a bit of a glossary of JavaScript monikers and early history:

JavaScript is the name you are most likely familiar with. It is the name that stuck, but it was basically a marketing stunt to ride Java’s fame: early on, Netscape’s browser-scripting language was called LiveScript. Heck, if you’ve spent enough time around Brendan Eich, you may have even heard that they wanted to call it “Mocha”.

Netscape, of course, couldn’t get away with being the sole browser offering in-browser client-side scripting — Microsoft’s Internet Explorer sported its very own interpreted script language, called JScript. JavaScript and JScript were similar, but not the same: the compatibility issues that followed started to plague the lives of contemporary “web designers”, so Netscape, Microsoft and the European Computer Manufacturers Association (now Ecma International) went ahead to unify the grammar and standardize browser scripting under the name — you guessed it — ECMAScript.

Several languages based on the ECMAScript standard popped up in the following years. Probably the most widely known is Flash’s ActionScript, which tried to make up for the original language’s shortcomings but, despite the effort, failed to pass its extended features on to the mainstream. Quite a few other languages (like CoffeeScript or TypeScript) have extended JavaScript in various ways since then, while the language itself evolved, incorporating the best ideas into the core. Ecma (more specifically, TC39) has released several editions of the base standard: ECMAScript 5.1 is the most recent stable one, while ECMAScript 2015 (previously known as Harmony, ES.Next or simply the 6th edition, ES6) is in the Release Candidate 3 phase and is slated for approval by the Ecma General Assembly in June.

Occupying the browser

With this post I am not in the market of selling ES6’s advances — there are a lot of great sources to find out more about the upcoming cool features and extensions — however I think we could agree on the fact that JavaScript has pretty much dominated the browser market. There aren’t a whole lot of client-side languages in browsers today, and that’s for a reason: VBScript was dropped in IE11 and Google is still trying to shoehorn Dart into the web (well, that mostly means Chrome) — with not much success.

What remains then? Not much, basically (quite) a few compile-to-js & JavaScript-superset languages, but at the end of the day, even these are just pushing JavaScript’s agenda forward. Heck, JavaScript even compiles to itself — with tools being able to transpile the new functionality in ES6 into previous versions of the standard for backward compatibility.
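To make the “compiles to itself” idea a bit more concrete, here is a minimal sketch of an ES6 snippet next to a hand-written ES5 equivalent. The function names are made up for illustration, and the ES5 version only approximates what a transpiler might emit; it is not the literal output of any particular tool.

```javascript
// ES6 source: arrow function, default parameter, template literal.
const greetEs6 = (name = 'world') => `Hello, ${name}!`;

// Hand-written ES5 equivalent (an approximation of transpiler output).
var greetEs5 = function (name) {
  if (name === undefined) {
    name = 'world';
  }
  return 'Hello, ' + name + '!';
};

console.log(greetEs6());      // Hello, world!
console.log(greetEs5('ES5')); // Hello, ES5!
```

Both functions behave the same for these inputs, which is the whole point: the newer syntax is sugar that can be lowered to the older edition of the standard.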

Suddenly — a wild JavaScript appears

As JavaScript became more and more ubiquitous and accumulated developer mindshare, it started popping up in weird and unexpected places: initially, this was just the corners of the web previously untouched by the JS-pandemic, but soon after that, JavaScript was all over the place. Two (more or less) well-known laws in IT contributed to, and even foretold, JavaScript’s world domination.

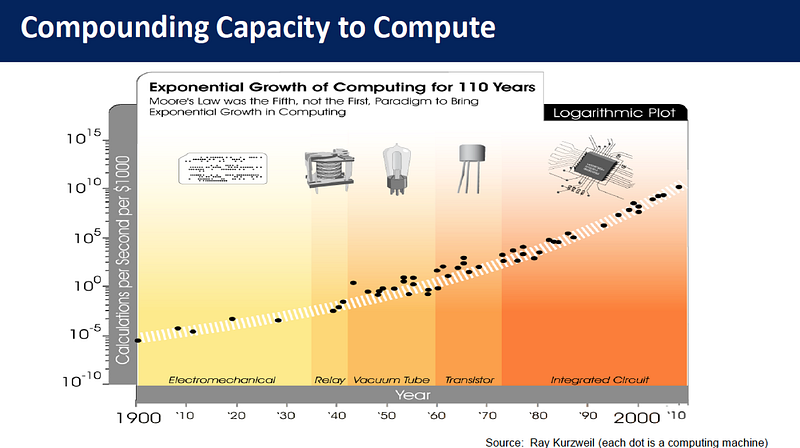

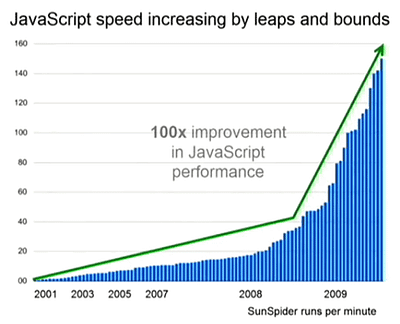

Moore’s Law

I doubt computing’s most essential law needs any explaining to anyone who got this far in reading this article: Moore’s law (oversimplified) states that the number of transistors on a chip (and thus hardware complexity and, loosely, speed) doubles roughly every two years. It has been generalized in various ways (e.g. that it really is about “exponential progress in the wake of tiny revolutions & paradigm shifts”), but we are interested in its rawest aspect: explosive growth of brute computing power in an ever-shrinking package.

These seemingly unstoppable technological advances made performance a less sensitive aspect of developing applications. In a software ecosystem, performance is just one aspect: developer mindshare (programmers familiar with the language and environment), available tools and libraries, openness and onboarding cost are all very real constraints one has to deal with. Back in the days when megahertz and kilobytes were in scarce supply, JavaScript’s suboptimal nature was a much bigger hindrance than it is on today’s amply powered, cheap devices.

Moore’s law was what made the mobile web possible, while in the meantime JavaScript took a stab at the server side and at desktop and mobile operating systems, eventually seeping into the tiniest microcontrollers.

Serve your script, and eat it too

As mentioned above, node.js wasn’t the first time JavaScript wandered server-side: Netscape offered server-side JavaScript execution in its Enterprise Server as early as 1995, right after the language’s inception.

But it didn’t stick.

Why? Well, mostly because it was a serious pain in the bum to use. For one thing, it wasn’t live at all: the application had to be compiled again after the slightest modification.

Of course, just because it didn’t work out for Netscape, that doesn’t necessarily mean server-side JavaScript was a lost cause, so various solutions to the same problem kept popping up.

Helma and Narwhal were two of those projects. Helma (now abandoned, or rather spun out into Ringo.js) ran on Mozilla’s Java-based JS engine, Rhino, while Narwhal theoretically supported several different JS backends. Both frameworks found their respective niches, but neither found widespread popularity. That could be chalked up to several of their shortcomings, but before we get there, there is one more thing we need to mention: the DOM.

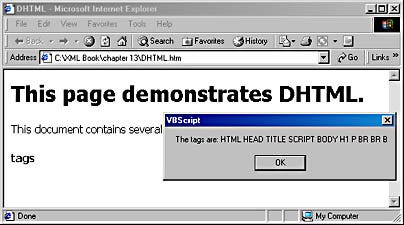

DOMinating the JavaScript World

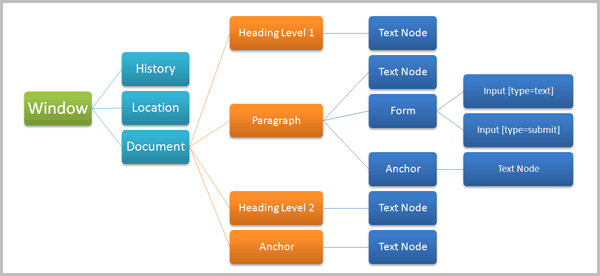

After Microsoft and Netscape released their respective scripting-enabled browsers, they set about standardizing the programming language, an effort that later became ECMAScript. However, web browsers (even back then) were not just empty shells or nice facades for a script console. A web browser comprised a full environment (an operating system of its own, so to speak), of which JavaScript (or JScript) was just a tiny fragment: every time one needed to interface with one of the browser’s features (like the HTML markup of the document itself, or the styling of the nodes), one needed an API for that.

Both Netscape Navigator and Internet Explorer went on to introduce their own (of course, incompatible) APIs for accessing the document object model, which eventually went on to be standardized separately and became the DOM standard. The DOM is supposed to supply the bindings (both language- and platform-independent) to the features exposed in a browser (or more broadly, by the environment), and has evolved in tandem with ECMAScript, in standards released by the W3C.

Eventually, besides the DOM several other features and extensions emerged, some of which went on to be standardized and accepted cross-browser, some of which remained confined to a single browser vendor or environment. Such efforts are continuously being worked on as of today (one particular cooperative effort being the Service Worker specification), and are even encouraged, as a means of extending the web and keeping it up to speed with technological advancements.

Why is all of the above relevant? Well, for one thing, the language and the DOM are confused quite often, so it doesn’t hurt to get that misunderstanding out of the way. More importantly, while all client-side JavaScript solutions at this point had some standardized APIs for accessing their environment, all of the server-side solutions above were vastly incompatible with each other (e.g. in how they accessed a file’s contents on the server). There had been attempts to weld the two technologies together before: one such unlikely hybrid was Jaxer, which ran a fairly complete Firefox instance, browser DOM included, on the server side. However, even Jaxer had its own way of dealing with lots of the environmental factors, so for really useful outcomes of this hybridization, we still had to wait for node.js to arrive.

Enter node.js

Whoa, I have sure preached quite extensively about node up to this point (not that I have any particular interest in selling it to anyone), but hopefully I have managed to give a fair preface to:

Why was node.js a big deal?

It just happened to be in the right place, at the right time.

The browser wars were raging, JavaScript execution speed was tenfold ahead of anything anyone had ever seen before — and due to the fierce competition, was still steadily increasing… and yet,

Ryan Dahl, the creator of node.js, wasn’t even trying to shoehorn JavaScript into a server environment.

Au contraire: by his own admission, he just stumbled upon the mighty V8 engine while researching event-driven server techniques. JavaScript just happened to be a suitable fit for evented, non-blocking I/O and a single-threaded, event-loop-based environment.
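A minimal sketch of what “evented, non-blocking” means in practice follows. It uses nothing but `setTimeout`, which is available in both node and browsers; the `order` array is just an illustrative helper, and a real server would register callbacks for network or file I/O rather than a timer.

```javascript
// Records the order in which things actually happen.
const order = [];

// Schedule work for later: setTimeout returns immediately, and the
// callback runs only after the currently executing code has finished.
setTimeout(() => {
  order.push('callback');
  console.log(order.join(' -> ')); // main script -> callback
}, 0);

// This line runs first, even though it appears after the setTimeout call.
order.push('main script');
```

The single thread is never parked waiting for the timer; it finishes the current script and then picks up the callback from the event loop, which is the same pattern node applies to I/O.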

A-synchronicity

Even though the idea of asynchronous, non-blocking (evented) I/O wasn’t new at all, event-driven web servers were not all that abundant. Event-driven servers go back at least as far as 1999, when the nascent Flash (no, not that Flash) server used this technique, but other server-side solutions using the same paradigm are in quite short supply. In fact (and please correct me if I’m wrong), node.js actually predates the single other relevant solution I could find, the Tornado web server (written in Python), by at least half a year.

As Alexandre pointed out in the comments, the Nginx server was also event-driven (which is actually mentioned in Ryan’s original presentation, pitting it against Apache’s threaded system); however, I would argue that it is not powerful or flexible enough for this to count as a fair comparison.

As several readers also pointed out, the Twisted framework (also written in Python) was an event-driven, asynchronous networking framework that well predates node’s release. One could argue whether Twisted fits the argument above or not, but it is certainly a valid point. Thanks for pitching in!

(Twisted also has extensive interoperability with the above-mentioned Tornado web server, which I think is a better match for node, but that’s simply a personal opinion.)

Gathering a Common-ity

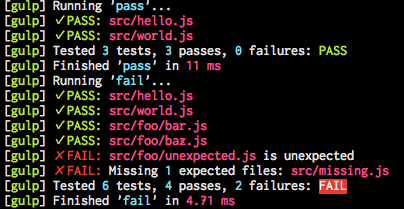

Node was the first server-side JavaScript solution to gather a sizable community (on several different levels). Once it was released as open-source software, contributions flowed in (arguably not at a pace that was satisfactory for some, but well, it was a start). And with CommonJS adopted as the interoperability platform in node, and thanks to npm, the developer ecosystem thrived. (Narwhal and RingoJS both used the CommonJS standard, but neither could pull off anything like what the advent of node.js and npm spurred.)

The arrival of io.js in early 2015 further extended and revitalized the community around node.

Contributions soared sky-high as the open-governance model selected by the fork’s authors started to do its magic:

in a few months, the number of active contributors has overtaken that of the early node.js days, and localization teams took the community to new frontiers.

“Okay, okay — node.js breathed new life into server-side programming, it is our savior, a fearless knight in shining armor, yadda-yadda — I get it, sheesh…” — well yes, but it didn’t even stop there!

Extending the chain of command

Node also made it trivial to embed JavaScript in a cross-platform way into… well, practically anything. It all started with the command line, and in the blink of an eye JavaScript-based scripting and tooling was zapped into the world.

Easy to use, extensible, and available for all relevant platforms, Grunt, Gulp & co. extended the tooling around not just JavaScript and the web, but any conceivable platform.

(Did you know even Photoshop had its own scriptable node.js instance built in?)

Also, what Jaxer couldn’t pull off (an embedded browser with a DOM) came almost naturally to node: first with the help of PhantomJS, and not long after that with JSDOM adding JavaScript-native, first-class support for accessing the DOM in node.js, automated client testing for web content was reinvented in a familiar package (check out Domenic Denicola’s superb talk on JSDOM and its motivations!). Guess what, even that wasn’t enough: after conquering the browser, the server and the command line, node went for an even bigger fish in the fishbowl, namely desktop apps.

A NW era

Thanks to the speedups of JavaScript and advances in HTML5/CSS web technology, HTML5 apps are all the rage, and most of the time they run at ample speed on a common low-to-moderately specced device. As node.js brought a myriad of APIs for accessing low-level operating system primitives (such as the filesystem and networking), it was only natural to expose these primitives to a web rendering context. Since both Chromium and node.js used V8 as their JavaScript engine, fusing the two together was a no-brainer: this was how node-webkit (now named NW.js) was born.

Node-webkit spurred a whole new class of desktop projects into existence, and after Cloud9 IDE successfully wedded node.js and web technologies in what became a state-of-the-art integrated development environment, projects like Brackets and Atom brought the experience to the desktop (while keeping the best of both worlds, cross-platform interoperability and extensibility, intact).

Harder, Better, Faster, Stronger

While JavaScript was becoming more and more sophisticated and powerful thanks to the work of TC39 on the ECMAScript standard, and ever more omnipresent (reaching farther than ever thanks to node.js et al.), its evolution didn’t seem to stop, or even slow down.

JavaScript was bound to become faster while the devices it was powering kept shrinking smaller and smaller.

Okay, JavaScript — sooo…

Browsers? Done. Servers? Easy! Mobile? Devoured! Desktop? Done & dusted! Anything else you might want to try?

Well what about IoT?

The JavaScript of Things

Well, this idea of the “internet-of-things” is quite popular nowadays, one might say. Smart watches, smart lighting, smart heating, smart houses; internet-connected fridges and washing machines and electric kettles — you name it.

But these are small, limited-power devices, tiny chips with tiny memories and tiny power supplies. Surely a resource-hogging, power-hungry language like JavaScript shouldn’t be driving these, no?

Well, think again! An era of the JavaScript of things is closer than you might imagine.

A fiery fox to the rescue

A few years ago no one would have thought that a low-power, cost-conscious mobile device based on web technologies was even possible, and yet Firefox OS has proven that it is not just possible but very much feasible, with ~15 different Firefox OS-powered devices released in ~30 countries in the last two years.

All these devices are full-blown, internet-capable touchscreen smartphones, selling for as little as ~$33. These, and the other types of gadgetry (like Panasonic’s Firefox OS-powered TVs), are the proof that JavaScript and web technology are still rather far from hitting their limits.

Actually, they are so far from said limits that Samsung decided to use them on its smartwatch platform, based on the Tizen operating system, while at the same time approaching Ecma International’s TC39 with the idea of standardizing a mobile-conscious, even more frugal subset of the language to be used on the smallest scales of devices.

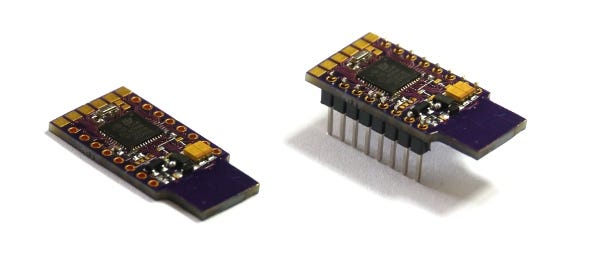

While we don’t yet know what has come out of Samsung’s proposal, embedded JavaScript is a very lively topic of its own. Samsung’s original TC39 pitch references Technical Machine’s Tessel and the Espruino, two microcontroller platforms built around JS.

Before we dive into the details of creating a JavaScript solution for such highly resource-constrained hardware, I would like to introduce the second law I wanted to share with you:

“Any application that can be written in JavaScript, will eventually be written in JavaScript.”

— Atwood’s Law by Jeff Atwood

I won’t try to over-explain the law above (it’s pretty self-explanatory, anyway), but I will try to show it at work in the remaining part of this article.

Minuscule Scripts

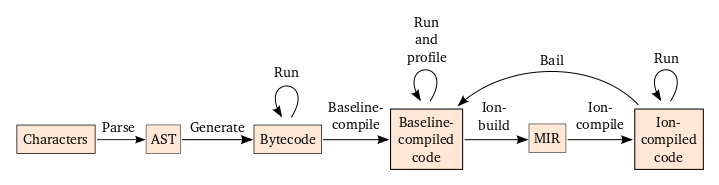

JavaScript at its core is an interpreted language. This means that it is run by evaluating its source code just before executing it. Of course, various optimizations can be conceived to ease and speed up JavaScript execution. One of the most basic (and also very powerful) ones is parsing the source and building an AST, an Abstract Syntax Tree. An AST is a representation of the source code that is much more easily traversed by the interpreter. The source code is still not compiled to machine code in advance but executed on the fly; however, the interpreter now walks the tree, thus avoiding the overhead of tokenizing and parsing (and re-parsing) the source text over and over. This is an obvious gain without many drawbacks, which is exactly why nearly all JavaScript engines use this method. There are even some parsers written in JavaScript itself (like Esprima) that can be used to view and even fiddle with this intermediary state. Esprima’s AST output can be used for several interesting use cases like transpiling and code completion (a lot of node-based tools for JavaScript pre-processing and code transformation are based on Esprima).
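To illustrate the parse-once, walk-many-times idea, here is a deliberately tiny sketch that handles only arithmetic expressions. The function names (`tokenize`, `parse`, `evaluate`) are my own, and the node shapes are only loosely modeled on what parsers like Esprima produce; a real engine is vastly more involved.

```javascript
// Tokenize: split the source into numbers and single-character operators.
function tokenize(source) {
  return source.match(/\d+(?:\.\d+)?|[-+*\/()]/g) || [];
}

// Parse the tokens into a tree, honoring operator precedence.
function parse(tokens) {
  let pos = 0;

  function primary() {
    const token = tokens[pos++];
    if (token === '(') {
      const node = expression();
      pos++; // skip the closing ')'
      return node;
    }
    return { type: 'Literal', value: Number(token) };
  }

  // Builds left-associative chains of the given operators.
  function binary(next, operators) {
    let left = next();
    while (operators.includes(tokens[pos])) {
      const operator = tokens[pos++];
      left = { type: 'BinaryExpression', operator, left, right: next() };
    }
    return left;
  }

  function term() { return binary(primary, ['*', '/']); }
  function expression() { return binary(term, ['+', '-']); }

  return expression();
}

// Walk the tree; the source text is never touched again here.
function evaluate(node) {
  if (node.type === 'Literal') return node.value;
  const l = evaluate(node.left);
  const r = evaluate(node.right);
  switch (node.operator) {
    case '+': return l + r;
    case '-': return l - r;
    case '*': return l * r;
    default: return l / r;
  }
}

const ast = parse(tokenize('2 + 3 * (4 - 1)'));
console.log(evaluate(ast)); // 11
```

Once `ast` exists, `evaluate(ast)` can be called as often as needed without tokenizing or parsing again. The price is that the tree has to live somewhere in memory.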

There is one small problem with this approach. Besides the initial processing requirement, memory space is required to store the AST so that it can be executed later. For most applications this should not be a limiting factor, but when we get down to the sub-megabyte memory capacities of microcontrollers, this becomes a very real restriction.

The Espruino

The issue above was exactly what Gordon Williams faced as the creator of the original Espruino, a tiny hackable board consisting of a single microcontroller with 64 kilobytes(!) of memory that still executes JavaScript. Compiling or parsing to AST form was not an option on such a low-memory device (and some of the standard’s requirements, like Unicode support, had to be glossed over because of the limited memory capacity). For that reason, code on the Espruino runs from the source itself, parsed and executed on the fly! This, of course, comes with quite a few peculiarities that should be taken into account, like white-space affecting the speed at which the code runs on the Espruino!*
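Why would white-space matter? The toy evaluator below is an assumption-laden sketch, not Espruino’s actual implementation: it only understands sums of integers, and `runFromSource` and its `steps` counter are hypothetical names. It executes straight from the source text and counts how many characters it has to look at on each run.

```javascript
// Evaluates sums like "1+2+3" straight from the source text,
// keeping no tokens or tree in memory between runs.
function runFromSource(source) {
  let pos = 0;
  let steps = 0;   // how many characters the interpreter had to look at
  let total = 0;
  let current = 0;

  while (pos < source.length) {
    const ch = source[pos++];
    steps++;
    if (ch >= '0' && ch <= '9') {
      current = current * 10 + Number(ch);
    } else if (ch === '+') {
      total += current;
      current = 0;
    }
    // anything else (white-space) is skipped, but still costs a step
  }
  return { value: total + current, steps };
}

const parts = ['1', '+', '2', '+', '3'];
const compact = runFromSource(parts.join(''));
const spacedOut = runFromSource(parts.join(' '.repeat(3)));

console.log(compact.value, compact.steps);     // 6 5
console.log(spacedOut.value, spacedOut.steps); // 6 17
```

Both runs produce the same value, but the padded version costs more than three times as many steps, and that cost is paid again every time the code executes.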

The upcoming new iteration of the Espruino, the Espruino Pico, will have a tiny bit more memory at its disposal (96KB), but even that is not enough to adequately solve this issue. So while Espruino boards can be programmed in the familiar JavaScript language and execution remains dynamic (that is, interpreted on the fly), blind spots in the engine’s standards compliance make it unable to take advantage of a sizable part of the rich ecosystem that the language offers. Espruino’s strengths, however, lie in its affordability and its potential for narrowing the gap between hardware and software (further lowering the barrier to entry into hardware hacking with tools like its Web IDE).

The original Tessel

For some of the reasons stated above, the great folks developing Tessel at Technical Machine chose a different approach. The Tessel had ample RAM (32MB) at the disposal of the JavaScript interpreter; however, the Cortex-M3 microcontroller that drives the whole machinery has only about 200KB of memory of its own, which makes it quite difficult to run any high-level JavaScript interpreter on it.

There was, however, one language that had been begging to be embedded from the very beginning: Lua. Lua is a programming language that closely resembles JavaScript, so writing a compiler that translated the JavaScript source to Lua and executed the translated code on the very compact Lua virtual machine on the device’s microcontroller sounded like an epiphany.

Because of this, Tessel could be designed to be “node-compatible”, which meant it was able to run most modules straight out of npm without much hassle (provided they didn’t have any binary dependencies, but that’s another story).

The concept above worked in some places, and failed in some others, but most of the time it got the job done — yet Tessel’s strong suit wasn’t its JavaScript compatibility or performance anyway: it was its plug-n-play nature. One could order pre-manufactured extension modules from the website and simply by typing the module identifier into an npm install command, they could access the additional hardware in 5 seconds via simple JavaScript call(back)s.

This kind of extensibility and modularity showcases the path outlined in the Extensible Web Manifesto: its basic idea of “exposing low-level primitives for developers and letting them expand upon them in JavaScript code” is beautifully demonstrated, for example, in Tessel’s community modules directory.

Now before we march on to review Tessel’s second iteration, I think we should take a little detour back into FirefoxOS-land:

Firefox OS’s twisted twin brother: Jan OS

Well, Jan OS is a strange animal! Invented by the crazy Dutchman (the Frankenstein of Amsterdam) Jan Jongboom, it is neither a phone (any more) nor a microcontroller platform (yet), but a bit of both.

Jan OS is based on Firefox OS’s open source code (it’s a fork, if you will), and focuses on the hardware and sensors. The same sensors and modules you could buy for hundreds of dollars as addons for your Tessel, here come pre-soldered to a highly compact and power-conscious IT board (that you got for a few tens of bucks, but back then they called it a phone).

Firefox OS’s (dead-simple) hardware APIs are all right there, so by stripping the unneeded cruft, the UI (Gaia) and the security/permission mechanics (basically rooting the phone) you get a highly optimized, cellular-network-connected, low-power internet-of-things board. With an ample power supply you could, for example, stick your contraption out in the wild and have it take and upload photos wirelessly for a whole month!

What happens here is that you are basically running a slim, mobile-optimized Linux kernel (Firefox OS’s kernel, Gonk is based on the AOSP kernel), with Mozilla’s Gecko engine (including the SpiderMonkey JavaScript engine it comes with — with all optimizations, bells-n-whistles you would get on the mobile or desktop browser — hellooooh, Jaxer!), but with the addition of WebAPIs for accessing the mobile chipset, wifi, FM radio(!), sending SMS text messages, initiating calls or capturing gyroscope data!

Might I add, there is even support (in both Firefox OS and Jan OS) for the Raspberry Pi? That said, it should soon be fairly apparent why I chose to introduce Jan OS right before we talked about the Tessel 2.

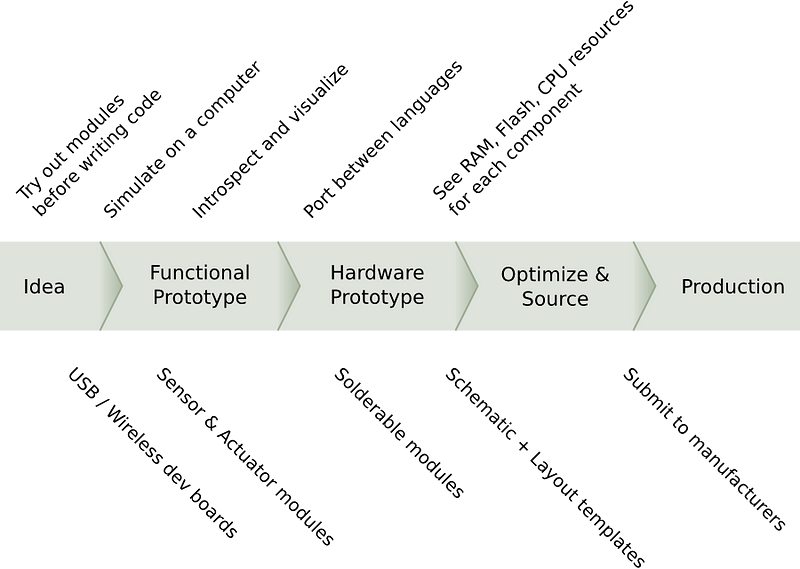

Tessel 2 — hardcore prototyping

The second iteration of Tessel positions itself in the sweet spot of all the hardware mentioned above: it is nearly as powerful as the Raspberry Pi, it is much cheaper than the original Tessel was (less than half the price!), and it is just as modular and extensible as the first one (heck, it even got better with the inclusion of two standard USB ports!). Last but not least, it runs a tiny Linux kernel and io.js, leveraging the full power, speed and ecosystem node/io.js has to offer, while doing away with compatibility issues once and for all!

Well, that’s already nice, but the best thing is yet to come: you could use the Tessel 2 to prototype your product and also to bring it to market, because if you wanted, you could order a bunch of pre-fabricated Tessel 2s with integrated module boards and put them right into your final product!

Technical Machine’s “Fractal” concept makes the scenario above even more tempting, taking modularity and prototyping to a whole new level by using seamless interactions between JavaScript-driven modules and performance-sensitive, close-to-the-metal compiled modules.

Performance-sensitive you say? Close to the metal? Let’s dive a bit further into the wizards’ den, look into what JavaScript’s future has to offer…

Way ahead of its time:

JavaScript Droids from the Future

ASM.js is not a new technology. Well, it’s not really even a technology, but rather the outcome of a bunch of iterative optimizations: a style of JavaScript that in the end just happened to be easy to optimize and to run really fast. It is more like a kind of guided evolution, if you wish. We have already mentioned some optimizations (like the AST) that help JavaScript performance-wise. The AST is used to speed up the interpreter and make runtime execution speedier, but nobody said that this is the only way JavaScript could be executed.

The keyword here is: compilation.

Just-in-time (JIT) compilers have been widely known and used since the earliest days of interpreted languages, and JavaScript is no different (however, for dynamically typed languages like JavaScript, JIT-ing does have its fair share of quirks and pitfalls).

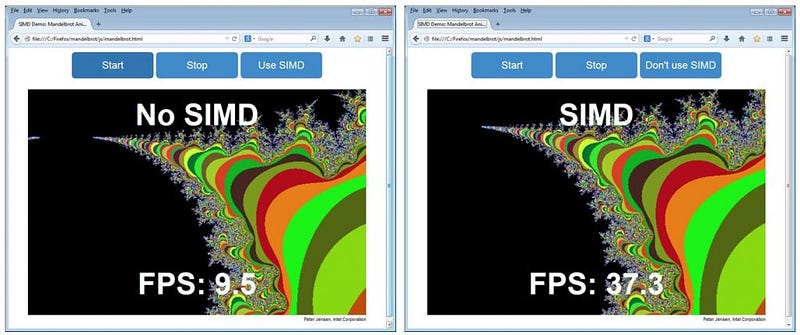

Optimizing hot code (like long loops or frequently called functions) by compiling it to directly executable machine code instructions can result in sizable gains in execution performance. What ASM.js tries to achieve is to make AOT (ahead-of-time) compilation of swaths of JS source possible by defining a strict, statically typeable subset of JavaScript that can be compiled well before execution. Compilation generates type-safe, readily executable machine code with predictable performance, since code in this subset works only with numbers and a manually managed typed-array heap, sidestepping managed memory and garbage collection altogether.
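A small sketch may help show what such a subset looks like. The module below follows the asm.js conventions (the `"use asm"` directive and `| 0` integer annotations), but `TinyMath` and `add` are made-up names, and whether a given engine actually compiles it ahead of time is up to that engine. Since asm.js is plain JavaScript, the code gives the same results either way.

```javascript
// An asm.js-style module. The "use asm" directive is a hint: engines that
// recognize it may compile the body ahead of time, others run it as plain JS.
function TinyMath(stdlib, foreign, heap) {
  "use asm";

  // `x | 0` marks a value as a 32-bit integer, both for parameters and
  // for return values; this is how the subset carries type information.
  function add(a, b) {
    a = a | 0;
    b = b | 0;
    return (a + b) | 0;
  }

  return { add: add };
}

// The heap is an ArrayBuffer the module could use as its manually managed memory.
const tinyMath = TinyMath(globalThis, {}, new ArrayBuffer(0x10000));

console.log(tinyMath.add(2, 3));          // 5
console.log(tinyMath.add(2147483647, 1)); // -2147483648 (32-bit wraparound)
```

The second call shows the typed behavior: with both operands and the result pinned to 32-bit integers, the addition wraps around instead of silently turning into a floating-point number.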

There is also a second, no less important goal of ASM.js: establishing JavaScript as a fast target language. Compile-to-JS tools like Emscripten or GWT had existed for quite some time before ASM.js, and as the habit of compiling low-level languages into JavaScript persisted, engines started optimizing for the kind of source code these compilers emitted. ASM.js is the natural continuation (I am trying hard not to overuse the word “evolution”, as you might notice…) of this effort: it defines a common “language” (a standard syntax format) that makes the output of these tools comparable, more easily optimizable and perhaps a bit more readable (not that anyone would want to do that to themselves, anyway).

Look Ma’ — a time machine!

The results? Well, certainly awe-inducing, whether one talks about the thousands of MS-DOS games running in nothing but a browser thanks to archive.org’s collected library, or the wonders of 3D graphics produced by contemporary tools with built-in HTML5 (WebGL/JavaScript/ASM.js) output (like the Unreal Engine or Unity).

With browser support expanding, these wonders are reaching more and more users on the web every day, bringing high-performance games and even physics simulation into browsers and to the open web.

However, speed is not the only concern. Sometimes size (see above, for the Espruino) is just as important (if not more important).

That is where our tiny script engines make an appearance.

TinyScript — the Davids to your

JavaScript Goliaths

As mentioned earlier, Lua has frequently been embedded in other software products to provide extensibility and scripting to its host. This was partly due to its simplicity and extensibility, but also due to its small code and memory footprint.

Unfortunately for JavaScript (while being equally simple, quite extensible and certainly well-known), size has always been an issue for its runtimes, so it hasn’t achieved the same widespread adoption… yet. Based on what we learned about the Espruino engine, we would feel compelled to say that “a fast and standards-compliant JavaScript interpreter just cannot be squeezed into the small footprint these applications would require”, but again, we would be wrong.

Fast-forward to 2015 and enter MuJS and Duktape, two tiny JavaScript interpreters built for just the purposes mentioned above! Clocking in at ~200KB compiled size, they are lightweight and easily embeddable in any product (or microcontroller, for that matter!): the Duktape engine could be slipped onto a small embedded system with 256KB of flash storage and as little as 96KB of RAM and would hum along nicely!

(Espruino’s (even Pico’s) hardware, on the other hand, would still prove too slim even for these tiny runtimes.)

Sporting ECMAScript standard compliance and all the bells and whistles expected of a modern JS engine (garbage collector, Unicode support, RegExp engine, you name it), these tiny powerhouses are no small feat of engineering. Although these are fairly new projects, some have already started incorporating them into their products: game engines, for example, have long relied on Lua for their in-game scripting needs, but the new Atomic Game Engine uses Duktape instead.

Duktape has also approached the bare metal in projects like the IoT framework “AllJoyn.js”, while Tilmann Scheller at Samsung evaluated the fitness of the engine for general use in embedded systems (with very promising results).

Takeaways?

That concludes our journey up to JavaScript’s present and current directions. But what about the future? What does the future hold? It is hard to guess, as the landscape changes every second.

One thing seems pretty clear, though: JavaScript is not going away anytime soon — so one might as well learn a thing or two about it. Just in case.

Actually…

There is indeed one more thing. I keep seeing people all over the web chanting the old slogan (even those who actually read the article above):

“Well JavaScript is still butt ugly & unusable,

it is a disgrace of a language and will surely remain so!”

To those people: not to worry, give it a few years and JavaScript is destined to be practically extinct — dead as a dodo!

Whaaat?!

After all this preaching, why on Earth would anyone say that? The conclusion above literally says that “JavaScript is not going away anytime soon”, so what about that? It might seem that I am contradicting myself. I am truly sorry, but you will have to watch Gary Bernhardt’s prophetic talk to find out what is going on:

The Birth and Death of JavaScript

Hint: Steven Wittens’ previously linked article on ASM.js also has some pointers.

* in fact V8’s “Crankshaft” optimizing compiler has similar peculiarities that arise from code size because it chooses to inline functions based on their text-size (and that includes white-space and comments, too!).

To learn more about this, check out Joe McCann’s talk from dotJS 2014!