On Asm.js

Ending The Ice Age of JavaScript

The demo is striking: Unreal Engine, running live in a browser, powered by Mozilla’s Asm.js, offering near-native performance with hardware-accelerated graphics. A gasp here, a wow there, everyone is surely impressed. And look ma’, no plug-ins: just JavaScript, Web Audio and WebGL! Just don’t mind the 10-second hang on load as it’s Preparing JavaScript.

When I heard of it, it sounded great: a highly optimizable subset of JS, that can be dropped seamlessly into any existing code. It reminded me of Winamp’s charming evallib compiler, used in AVS and Milkdrop, which only did floating point expressions. It spawned a whole subculture of visual hackery based on little more than a dozen or so math functions and some clever graphics routines. It showed the power of being able to turn scripts into optimal machine code on the fly, and having a multimedia platform at your disposal while doing so.

But that’s not what Asm.js is for at all. It’s not for people, it’s a compiler target, a way of converting non-JS code into a form that browsers handle well. Its design is based on how JavaScript handles numbers: as 64-bit doubles, on which you can perform select 32-bit integer operations. As such, Asm.js seems like a sweet hack similar to UTF-8, an elegant way of encoding something complicated under strong legacy constraints: typed arithmetic despite a single number type. Yet the part of me that remembers pushing pixels with MMX, that watched this web thing for more than a few years, can’t help but ask exactly what it is we’re trying to do here.
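To make the number trick concrete, here is a minimal sketch in plain JavaScript of how 32-bit integer behavior can be coaxed out of doubles. The helper names (`toInt32`, `addInt32` and friends) are made up for illustration; only the operators and `Math.imul` are standard.

```javascript
// Every JS number is a 64-bit double, but the bitwise operators
// coerce their operands to 32-bit integers before doing anything.
function toInt32(x) { return x | 0; }     // signed 32-bit
function toUint32(x) { return x >>> 0; }  // unsigned 32-bit

// Integer addition with wraparound, the way a C `int` behaves on most targets.
function addInt32(a, b) { return (a + b) | 0; }

// Large products lose low bits as doubles, so an exact 32-bit
// multiply goes through Math.imul instead of `*`.
function mulInt32(a, b) { return Math.imul(a, b); }

const results = {
  truncated: toInt32(3.7),           // fraction is dropped
  wrapped: addInt32(2147483647, 1),  // INT_MAX + 1 wraps around
  unsigned: toUint32(-1),            // same bits, read as unsigned
  product: mulInt32(65536, 65536),   // 2^32 does not fit in 32 bits
};

console.log(results);
// { truncated: 3, wrapped: -2147483648, unsigned: 4294967295, product: 0 }
```

Nothing here needs a new number type. The integer semantics live entirely in which operators get applied, which is what makes the scheme work in any existing engine.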

Asm.js deserves closer inspection for two reasons. First, it’s the one “native browser VM” that doesn’t massively reinvent wheels. Second, it’s the only time a browser vendor’s “next-gen JS” attempts have actually gotten everybody else to pay attention. But what are we transitioning into exactly?

LLVM to Asm

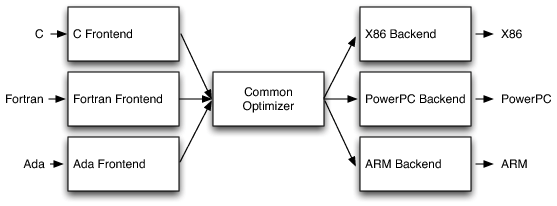

To understand Asm.js, you have to understand LLVM. Contrary to its name, it’s not really a Virtual Machine but rather a compiler. More precisely, it’s the modular core of a compiler, along with assorted tools and libraries. You plug in different front-ends to parse different languages (e.g. C or C++), and different back-ends to target different machines (e.g. Intel or ARM). LLVM can do common tasks like optimization in a platform and language agnostic way, and as such should probably be considered an open-source treasure.

LLVM architecture (source)

Per Atwood’s law, it was inevitable that someone would decide the back-end should be JavaScript. Thus was born emscripten, turning C into JS, or indeed anything native into JS. Because the output is tailored to how JS VMs work, this already gets you pretty far. The trick is that native code manages its own memory, creating a stack and heap. Hence you can output JS that just manipulates pre-allocated typed arrays as much as possible, and minimizes use of the garbage collector.
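The memory trick described above can be sketched in a few lines. This is a hand-written illustration, not actual emscripten output: the view names `HEAP32` and `HEAPF64` follow a common convention, while the toy `malloc` and `sumInts` are assumptions invented for the example.

```javascript
// One pre-allocated buffer stands in for the whole native address space.
const buffer = new ArrayBuffer(64 * 1024);
const HEAP32 = new Int32Array(buffer);    // the bytes viewed as 32-bit ints
const HEAPF64 = new Float64Array(buffer); // the same bytes viewed as doubles

// Hypothetical bump allocator: it hands out byte offsets, i.e. "pointers".
let heapTop = 8; // address 0 stays unused so it can act as NULL
function malloc(bytes) {
  const ptr = heapTop;
  heapTop = (heapTop + bytes + 7) & ~7; // keep everything 8-byte aligned
  if (heapTop > buffer.byteLength) throw new Error("out of memory");
  return ptr;
}

// Roughly: int *a = malloc(4 * sizeof(int)); a[i] = i * i;
const a = malloc(4 * 4);
for (let i = 0; i < 4; i++) {
  HEAP32[(a >> 2) + i] = i * i; // pointers count bytes, indices count ints
}

// Roughly: double *d = malloc(sizeof(double)); *d = 0.5;
const d = malloc(8);
HEAPF64[d >> 3] = 0.5;

// Reads `count` ints starting at byte offset `ptr`, with int32 wraparound.
function sumInts(ptr, count) {
  let total = 0;
  for (let i = 0; i < count; i++) {
    total = (total + HEAP32[(ptr >> 2) + i]) | 0;
  }
  return total;
}

console.log(sumInts(a, 4), HEAPF64[d >> 3]); // 14 0.5
```

No objects are created after setup, so the garbage collector has nothing to do. The price is that a "pointer" is just a number, with all the safety that implies inside the buffer.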

This works particularly well because JavaScript VMs already cheat when it comes to numbers and number types. They receive special treatment compared to other data. You can find a good overview in “value representation in JavaScript implementations”. The gist is that JS VMs handle floating point and integer arithmetic separately and efficiently, with lots of low level trickery to speed up computation. Modern VMs will furthermore try to identify code that uses one type only, and emit highly optimized type-specific code with only minimal checks at the boundaries. It’s this kind of code that emscripten can emit a lot of, e.g. translating the clang compiler into 48MB of JS.

Which brings us to the bittersweet hack of Asm.js. Once you realize that C can run ‘well’ as JavaScript even when the VM has to guess and juggle, imagine how much faster it could be when the VM is in on it.

What this means in practice is a directive “use asm” in a block of tailored code, along with implicit type annotations for arguments, casts and return values. Type casts are x|0 for int, +x for double. These annotations are parsed and validated, and optimized code is emitted for the entire code block. This doesn’t look bad at all. However, it also looks nothing like real Asm.js code in the wild.
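For reference, a small hand-written module in this style might look like the sketch below. The names `VectorModule`, `norm` and `sum` are invented for illustration, and I have not run it through a validator. An engine that doesn't recognize the directive, or rejects the module, simply runs it as ordinary JavaScript.

```javascript
function VectorModule(stdlib, foreign, heap) {
  "use asm";

  // Imports from the standard library and a typed view on the heap.
  var sqrt = stdlib.Math.sqrt;
  var HEAPF64 = new stdlib.Float64Array(heap);

  // Parameters and return value are annotated through coercions.
  function norm(x, y) {
    x = +x; // double
    y = +y; // double
    return +sqrt(x * x + y * y);
  }

  // Sums `count` doubles starting at byte offset `ptr`.
  function sum(ptr, count) {
    ptr = ptr | 0;     // int
    count = count | 0; // int
    var total = 0.0;
    var end = 0;
    end = (ptr + (count << 3)) | 0;
    for (; (ptr | 0) < (end | 0); ptr = (ptr + 8) | 0) {
      total = total + +HEAPF64[ptr >> 3];
    }
    return +total;
  }

  return { norm: norm, sum: sum };
}

// Usage: link the module against the global object and a 64 KiB heap.
const heap = new ArrayBuffer(0x10000);
const vec = VectorModule(globalThis, {}, heap);
new Float64Array(heap).set([1.5, 2.5, 3.0]);

console.log(vec.norm(3, 4)); // 5
console.log(vec.sum(0, 3));  // 7
```

Every `| 0` and unary `+` doubles as a type declaration, which is why the same file still behaves identically in an engine that has never heard of Asm.js.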

Benchmarks put it at roughly 1–2× the running time of native code, significantly faster than normal JS. Hooray, JavaScript wins again, the web is awesome. Because of LLVM, an enormous piece of external, non-web infrastructure. Wait what?

Impostor Syndrome

When something bugs me that I can’t put my finger on, there’s usually a contradiction that I’m not seeing. After a few talks, articles and conversations, it seems pretty obvious: it puts JavaScript on a pedestal, even as it makes it irrelevant.

It makes JavaScript irrelevant because with LLVM’s infrastructure or similar tools, practically anything can or will be compiled into JS.

But it also makes JavaScript more important, focusing the future optimization efforts of browser makers onto it, despite its ill-suited semantics.

It means JavaScript has nothing to do with it, it’s just the poison we ended up with, the bitter pill we supposedly have to swallow. So when Brendan Eich says with a smile “Always bet on JavaScript”, what he really means is “Always bet on legacy code” or perhaps “Always bet on politics”. When you think about it, it’s weird to tell JavaScript developers about Asm.js. It’s not actually aimed at them.

Looking around, in the browser there’s CoffeeScript, TypeScript and Dart. Outside, there’s Python, Ruby, Go and Rust. Even CSS now has offspring. The future of the web is definitely multilingual and some people want to jump ship, they’re just not sure which one will actually sail yet.

When faced with a legacy mechanism like UTF-8 or indeed Asm.js, we have to ask, is it actually necessary? In the case of UTF-8, it’s a resounding yes: we need to assign unique names to things, and this name has to work with both modern and legacy software, passing through unharmed as much as possible. UTF-8 solves a bunch of problems while causing very few.

But with Asm.js, it’s just a nice-to-have. All Asm.js code is new; there is no vault of legacy code that will stop working if we do it wrong. We can already generate functioning JS for legacy browsers, along with something new for alternative VMs. Having one .js file that does both is merely a convenience, and a dubious one at that.

Indeed, the unique appeal of Asm.js is for the browser maker who implements it first: it lets their JS VM race closer to that much desired Native line. It also turns any demo that uses Asm.js into an instant benchmark, which other vendors have to catch up with. It’s a rational choice made in self interest, but also a tragedy of the commons.

Maybe that’s a bit hyperbolic, but work with me here. There’s a serious amount of defeatism and learned helplessness at work, and again a contradiction. We seek to get ever closer to native performance, yet fall short by design, resigning ourselves to never quite reaching it. I can’t be the only one who finds that completely bizarre, when there are laptops and phones running entirely on a web stack now.

If you look at the possible future of Asm.js, there’s SIMD extensions, long ints with value objects, math extensions, support for specific JVM/CLR instructions and more. Asm.js is positioned not just as something that works today, but that leads into a bright future to boot. And yet, it all has to be shoehorned into something that is still 100% JavaScript, even as that target itself consists of moving goalposts.

Part of Unreal Engine, JSified.

History Repeating

So fast forward a year or two. Firefox has completed its wishlist and Asm.js has filled its instruction set gaps. Meanwhile Chrome has continued to optimize V8. Will they have the same new language features that Firefox has? Will they officially support Asm.js? Or push Dart and PNaCl, expanding their influence through ChromeOS and Android? Your guess is as good as mine. As for IE and Safari, I’ll just pencil in “behind” for now and leave it at that.

But a certain phrase comes to mind: embrace and extend. From multiple fronts.

It looks like a future where your best bet to get things running fast in a browser is to do decidedly non-web things. You compile something like C to the different flavors of web at your disposal, either papering over their strengths, or tailoring for each individually. That’s not something I personally look forward to, as much as it might arouse Epic’s executives and shareholders today.

Web developers wouldn’t actually be working that differently. They might be using a multi-language framework like Angular, or dropping in a neat C physics library somebody cross-compiled for them. I doubt they’ll have a nice web-native way to run the same performance-critical code everywhere. You’ll just waste some battery life because a computer pretended to be a JavaScript developer. For backwards compatibility with browsers that auto-update every few weeks. Eh?

I admit, I don’t know what the post-JS low level future should look like either, but it should probably be closer to LLJS’s nicely struct’ed and typed order than either the featherweight of Asm.js or the monolithic Flash-replacement that is PNaCl.

The big problem with Asm.js isn’t that it runs off script rather than bytecode, it’s that the code is generated to match how JS engines work rather than how CPUs compute. At best it will be replaced with something more sensible later or just fizzle out as an optimization fad. At worst it’ll become the IA-32 of the web, still pretending to be an 8086 if asked to.

Looking ahead, there’s computation with WebCL, advanced GLSL shaders and more on the horizon. That’s a whole set of problems that can become much simpler when “browser” is a language that everyone can speak, to and from, rather than a weird write-only dialect built on a tower of Babel. We don’t just need a compilation target, we need a compilation source, as well as a universal intermediate representation.

And this is really the biggest contradiction of them all. Tons of people have invested countless hours to build these VMs, these new languages, these compilers, these optimizations. Yet somehow, they all seem to agree that it is impossible for them to sit down and define the most basic glue that binds their platforms, and implement a shared baseline for their many innovations.

We really should aim higher than a language frozen after 10 days, thawing slowly over 20 years.